Blog

Insights and Technology

Our story, vision and perspectives on technology, design and business solutions.

Featured Articles

News

5 min read

Announcement: Spiria is certified SOC 2 Type 2

<div><h2>What is SOC 2 certification?</h2><p>SOC 2 (Service Organization Control 2) certification is a standard developed by the American Institute of Certified Public Accountants (AICPA) that assesses an organization's ability to manage the risks associated with the security, availability, processing integrity, confidentiality and privacy of the data it processes on behalf of its customers.</p><p>SOC 2 certification is based on five principles, known as trust criteria, which define the minimum requirements an organization must meet to ensure the security and quality of its services. These criteria are as follows:</p><ul> <li><strong>Security</strong>: the organization protects data against unauthorized access, modification, disclosure, damage or loss.</li> <li><strong>Availability</strong>: the organization ensures the availability and continuous operation of its services in accordance with customer agreements.</li> <li><strong>Processing integrity</strong>: the organization processes data in a complete, valid, accurate, timely and authorized manner.</li> <li><strong>Confidentiality</strong>: the organization respects its confidentiality commitments and obligations towards its customers and third parties concerning the data it processes.</li> <li><strong>Privacy</strong>: the organization respects the privacy principles defined by the AICPA and the applicable laws concerning the collection, use, storage, disclosure and disposal of personal data.</li></ul><p>“Obtaining and maintaining the SOC 2 certification is to me like an ultramarathon, rather than a 100-meter sprint. It's a first step in a long and continuously evolving process. Cybersecurity, as a whole, requires rigour and constant attention to detail, which our team is ready to invest in.”</p><p>– Vincent Huard, Vice President of Data Management and Analytics</p><p>To receive the SOC 2 certification, an organization must undergo an independent audit by a qualified accounting firm to ensure that it complies with the trust criteria applicable to its services. The audit covers the design and effectiveness of the controls put in place by the organization to ensure compliance with the five trust criteria.</p><h2>What is the difference between SOC 2 Type 1 and Type 2?</h2><p>There are two types of SOC 2 certification, distinguished mainly by the period that the audit covers. SOC 2 Type 2 involves a more extensive and rigorous audit.</p><ul> <li>SOC 2 Type 1 certification attests that the organization complies with the trust criteria on a given date. It assesses the design of controls, but not their effectiveness over time.</li> <li>SOC 2 Type 2 certification attests that the organization meets the trust criteria over a defined period of time, generally from three to twelve months. It assesses not only the design but also the effectiveness of controls, taking into account their actual use and evolution.</li></ul><p>In other words, SOC 2 Type 2 certification meets more demanding and rigorous criteria, as it involves continuous monitoring and regular verification of controls. 
It offers greater assurance of the quality and security of the services provided by the organization.</p><h2>What are the benefits for our clients?</h2><p>By obtaining the SOC 2 Type 2 certification, Spiria reaffirms its position as a trusted partner in the development of digital solutions for its customers.</p><p>Here are some of the main benefits that enable our customers to undertake large-scale projects with peace of mind:</p><ul> <li>The guarantee that we uphold the highest standards of data security.</li> <li>The guarantee that we protect our customers' data against internal and external threats.</li> <li>The confidence that we ensure the availability and performance of our services.</li> <li>The confidence that we are able to react quickly and effectively in the event of an incident.</li> <li>The certainty that we treat your data with integrity, while complying with validation, accuracy, traceability and authorization rules.</li> <li>The peace of mind that we respect your confidentiality obligations and do not disclose your data to unauthorized third parties.</li> <li>The security of knowing that we respect privacy principles and comply with applicable laws on personal data.</li></ul><p>SOC 2 Type 2 certification is a guarantee of trust and security for our clients, testifying to our commitment to delivering quality services and upholding industry best practices. It represents excellence in data security across industries, and is becoming increasingly sought after for software development projects. It was therefore only natural for Spiria to be one of the few expert firms in North America to be certified.</p><p>We are proud to be certified and to guarantee the excellence, reliability and rigour of our business practices.</p><p>Start a project with confidence: <a href="mailto:NewProject@spiria.com">NewProject@spiria.com</a>.</p></div>

Strategy

5 min read

Choosing Between a Time-and-Materials or a Fixed-Price Contract

<div><p>Spiria teams have thorough and extensive experience with both types of projects. In this post, we’ll share what we have learned on the subject over the years and which criteria contribute to the success of each option.</p><p>But first, let’s go over the two types of projects:</p><h3>Time & Materials projects</h3><p>These are projects whose scope (activities, deliverables, inclusions and exclusions, etc.) is moderately well defined. The initial proposal provides an estimated price range for completing the project, after which costs are billed based on actual hours worked plus the required hardware and resource expenses (such as software licenses or cloud services). This approach is more flexible, as it allows both parties to adjust or change the specifications throughout the development process. This encourages agility and puts an emphasis on project management controls.</p><h3>Fixed-price contracts</h3><p>In contrast, the scope of this kind of project is usually well or very well defined. The initial cost estimate can be stated with confidence because it is based on more reliable information than in a T&M project. As the name suggests, costs are established at the outset, regardless of the actual hours worked and the cost of materials and other resources. Therefore, risk and profitability are critical considerations when opting for this type of contract. Any change to the initial specifications is governed by a change-request process and is billed as additional work.</p><p>Let’s imagine a first scenario in which the type of contract has been decided beforehand. The client opts for T&M or Fixed-price, a decision sometimes dictated by the organization’s internal requirements or even by industry regulations. This is often the case with calls for tender, which are mostly Fixed-price. Whenever possible, Spiria suggests an approach that leads to a better understanding of the project’s scope, thus mitigating risk. 
Spiria could recommend that the client invest in an initial discovery phase, whether in T&M or in Fixed-price mode, then propose the actual development and deployment phases as Fixed-cost. This helps the client assess whether it needs to change priorities or modify the scope as a result of the discovery phase. This flexibility allows us to negotiate the defined scope while amending the inclusions/exclusions, in order to remain within the agreed contractual Fixed-cost budget.</p><p style="text-align: center;"><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/11800/process-en.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/11800/process-en.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/11800/process-en.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/11800/process-en.webp" style="width: 60%; border: none;" alt="A Typical Project Cycle." title="A Typical Project Cycle."></source></source></source></picture></p><p style="text-align: center; font-style: italic;">Figure 1. A Typical Project Cycle.</p><p>In a second case where the type of contract is not predetermined, we have more latitude to choose our strategy. A client schedules meetings with various suppliers for a Q&A session, followed by internal discussions to evaluate the factors leading to the best strategy. To help the teams decide, the table below presents a non-exhaustive list of criteria that are quantifiable (easily identifiable and measurable) or qualitative. The answers will depend on the information provided during the initial meetings and in the specifications, and on information obtained by asking the client directly. 
The symbols in the two right-hand columns suggest ways to weigh the answers relative to the two types of projects.</p><table cellpadding="0" cellspacing="0" style="width:100%"> <tbody> <tr> <td style="width:76%"><strong>Points</strong></td> <td style="width:12%"><strong>Fixed</strong></td> <td style="width:12%"><strong>T&M</strong></td> </tr> <tr> <td>The business plan, requirements, needs and expectations are clear.</td> <td>➕➕</td> <td>➕</td> </tr> <tr> <td>The business rules and processes are numerous and complex.</td> <td>➕</td> <td>➕➕</td> </tr> <tr> <td>The client’s budget is defined and budget planning is set.</td> <td>➕</td> <td>➖</td> </tr> <tr> <td>The schedule is tight or critical due to the client’s circumstances or business context.</td> <td>➕</td> <td>➖</td> </tr> <tr> <td>The required expertise is clearly defined.</td> <td>➕</td> <td>➕</td> </tr> <tr> <td>The organizational and decision-making structure is large and complex.</td> <td>➖</td> <td>➕</td> </tr> <tr> <td>The legal aspects are complex.</td> <td>➖</td> <td>➕</td> </tr> <tr> <td>A past relationship already exists, or a mutual contact recommended us.</td> <td>➕</td> <td>➕</td> </tr> <tr> <td>The risk, uncertainties and contingencies are high.</td> <td>➖</td> <td>➕</td> </tr> <tr> <td>There is a high likelihood of scope-creep.</td> <td>➖</td> <td>➕</td> </tr> <tr> <td>The client has staff or other internal capacity<br> (designer, development team, QA, etc).</td> <td>➕</td> <td>➕</td> </tr> <tr> <td>The technological environment is familiar.</td> <td>➕</td> <td>➕</td> </tr> <tr> <td>There are significant technological constraints (e.g. 
legacy system).</td> <td>➖</td> <td>➕</td> </tr> <tr> <td>There are many and complex challenges to integrating the solution.</td> <td>➖</td> <td>➕</td> </tr> <tr> <td>The choice of technology is pre-established.</td> <td>➕</td> <td>➕</td> </tr> <tr> <td>Data is available to reliably do quality assurance.</td> <td>➕</td> <td>➕</td> </tr> <tr> <td>The solution is subject to special certifications.</td> <td>➖</td> <td>➕</td> </tr> </tbody></table><p><br>This reflection can lead to different approaches, represented in the following diagram:</p><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/11800/strategies-en.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/11800/strategies-en.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/11800/strategies-en.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/11800/strategies-en.png" style="width: 100%; border-style:solid; border-width:1px;" alt=" Possible strategies or approaches." title=" Possible strategies or approaches."></source></source></source></picture></p><p style="text-align: center; font-style: italic;">Figure 2. Possible strategies or approaches (click to enlarge).</p><p>The strategy selected dictates how the contract agreement is concluded and has implications for the entire life of the project and its final success. The relationship will start out on the right foot if our process is transparent and we can explain our reasoning to the client. Our ultimate objective is to deliver a project that respects our Spirian values and that provides the expected value to the client.</p></div>

All Articles


Dev's Corner

5 min read

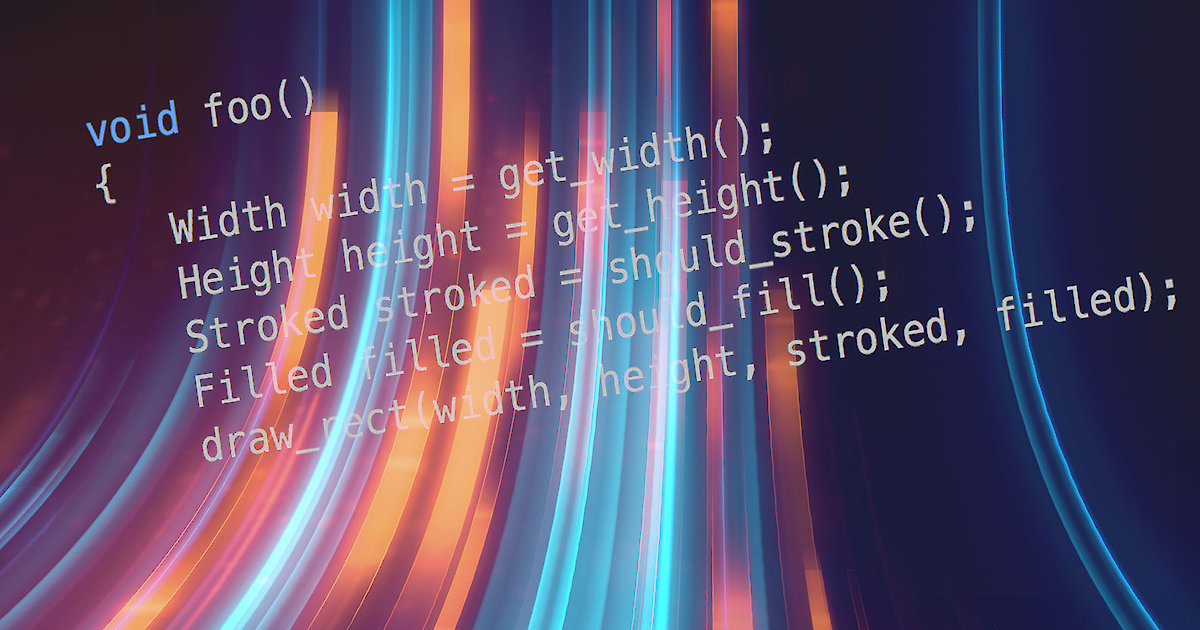

Hypothetical C++: extensible tagging

<div><p>C++ offers a rich set of tools for designing types and building a program’s vocabulary. Its type system is open-ended and allows programmers to define new types as needed. Yet there is one aspect in which it is closed: type qualifiers.</p><p>C++ provides two type qualifiers: <code>const</code> and <code>volatile</code><sup><a href="#footnotes">(1)</a></sup>. They allow us to give additional meaning to an existing type without having to create a separate type manually. The <code>const</code> qualifier is the most used, of course. If it didn’t exist, having to reproduce its behaviour for every type we create would be tedious. Without it, C++ would be a lot less expressive and harder to reason about. And yet, this expressive power is limited to these two qualifiers. There is a disconnect between their usefulness and our inability to create new ones.</p><h2>User-Defined Qualifiers</h2><p>What I’d like to see in C++ is the opening of qualifiers to user-defined ones. I’ll give you a few examples of what can be achieved with user-defined qualifiers, but let’s first describe how they would work. Quite simply, they would work just like <code>const</code> and <code>volatile</code>. That is:</p><ul> <li>They would qualify a type or a member function.</li> <li>An optional automatic one-way conversion could be declared, just as you can pass a non-<code>const</code> pointer to a function taking a <code>const</code> pointer.</li> <li>A forced conversion between the qualified and non-qualified type would be possible using a <code>const_cast</code><sup><a href="#footnotes">(2)</a></sup>.</li></ul><p>I do not wish to focus on the details of a hypothetical syntax. I think this detail is unimportant and everyone could come up with something. 
I’ll just show one possible choice using a new <code>typequal</code> keyword:</p><pre><code>typequal NewQualifier;
typequal NewQualifier auto qualified;
typequal NewQualifier auto non-qualified;
typequal NewQualifier invalid;
typequal NewQualifier invalid auto qualified;</code></pre><p>Each of these examples would create a qualifier named <code>NewQualifier</code>. The “<code>auto qualified</code>” variant means that the qualifier can be added silently to a type when assigned to a variable in the same way that the <code>const</code> qualifier works. The “<code>auto non-qualified</code>” variant allows the automatic conversion in the opposite direction. What about the “<code>invalid</code>” variant? This declares that data with the qualifier cannot be accessed. As you will see, this is a useful feature.</p><h2>Payoffs</h2><p>Now, I want to convince you of the usefulness of this feature. Let’s try to solve multiple problems that cause real bugs in actual programs.</p><h3>1. Null Pointers</h3><p>Let’s start with <code>null</code> pointers. Dereferencing <code>null</code> pointers is a major source of bugs. Having nullable pointers is often decried as a major design blunder in the language. But the real problem is not the <code>null</code> pointer. It’s the fact that the language does not prevent us from using a <code>null</code> pointer. Let’s fix that:</p><pre><code>typequal maybe invalid;</code></pre><p>That’s it. Now, every function that produces a pointer should produce a pointer with the underlying type adorned with the <code>maybe</code> qualifier. Given that it is declared as an invalid-marking qualifier, the language will not allow us to use the data. Once you have tested for <code>null</code>, you can <code>const_cast</code> it to remove the <code>maybe</code> qualifier. Of course, it would be even more practical if the language supported such a qualifier natively, so that built-in functions would take and provide pre-qualified pointers.</p><h3>2. 
Invalid Data</h3><p>Similar qualifiers can be used to describe different states of data. Two examples that often come up in code would be:</p><pre><code>typequal invalidated invalid auto qualified;
typequal tainted invalid auto qualified;</code></pre><p>The first could be used to mark data that has not yet been validated against a desired constraint. Often, a given group of functions will impose such constraints on its input. By having the entry-point function take <code>invalidated</code> data and the internal functions take unqualified data, we can ensure that internal functions cannot be called without the data being validated.</p><p>The second one is an idea borrowed from Perl: that <code>tainted</code> data cannot be trusted. It is similar to <code>invalidated</code>, but instead of merely not conforming to some constraint, it is to be treated with suspicion entirely. In Perl, such <code>tainted</code> data comes from web data, email data and other such untrusted sources. Additional precautions should be taken when validating the data.</p><h3>3. State of Data</h3><p>Of course, such a system of validation can be extended to support multiple states to reflect the progression of an algorithm. Or it can reflect different types of validation. 
Here are a few ideas:</p><pre><code>// The data has been sorted and can be binary-searched.
typequal sorted;

// Sort a vector and return the same vector with the qualifier.
sorted vector<int>& sort(vector<int>& unsorted_vector);

// Do a binary-search in a vector, but only if it has already been sorted.
bool find_in_data(const sorted vector<int>& data, int value);

// The data is shared between threads.
// You would create an instance of Lock that would
// take the shared data and mutex as arguments and
// do the const_cast to remove the shared qualifier.
typequal shared invalid;

// Which coordinate system is used in a 3D algorithm.
// Avoids the error of using a local point in an algorithm
// working in world coordinates.
typequal local;
typequal world;
typequal view;
typequal screen;

// Applying the corresponding matrix would return the
// vector with the qualifier correctly updated.
world vector& apply_world_matrix(local vector&, const local world matrix&);</code></pre><h2>Conclusion</h2><p>My goal was to show you the advantages of adding user-defined qualifiers to the C++ language. As demonstrated, user-defined qualifiers open a new world of possibilities with various benefits:</p><ul> <li>Being more expressive with existing data types.</li> <li>Clearly representing the state of the data to the code reader.</li> <li>Following the progression of changes made to data.</li> <li>Allowing the compiler to enforce various constraints.</li> <li>Avoiding bugs that arise from passing incorrect data to functions.</li></ul><div><p><br> </p><hr><p><sup>(1)</sup> There are also the <code>restrict</code>, <code>register</code> and <code>auto</code> type modifiers, but they do not exactly behave like <code>const</code> and <code>volatile</code>.</p><p><sup>(2)</sup> Although it would be more elegant to rename it to <code>qualifier_cast</code>.</p></div>
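User-defined qualifiers do not exist in C++, but part of the `sorted` idea can be approximated today with a thin wrapper type. The following is only a sketch under that assumption, not the hypothetical feature itself: the names `Sorted` and `sort_vector` are invented for illustration, and the wrapper takes the vector by value instead of qualifying it in place.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical stand-in for the `sorted` qualifier: a Sorted<T> can only be
// built by sort_vector(), so holding one is evidence the data was sorted.
template <typename T>
class Sorted {
public:
    // Read-only access, so the sorted order cannot be broken afterwards.
    const std::vector<T>& get() const { return data_; }

private:
    explicit Sorted(std::vector<T> data) : data_(std::move(data)) {}
    std::vector<T> data_;

    // Only sort_vector() may call the private constructor.
    template <typename U>
    friend Sorted<U> sort_vector(std::vector<U> unsorted);
};

// Sort a vector and return it wrapped in the "qualified" type.
template <typename U>
Sorted<U> sort_vector(std::vector<U> unsorted) {
    std::sort(unsorted.begin(), unsorted.end());
    return Sorted<U>(std::move(unsorted));
}

// Binary search that only accepts data known to be sorted.
template <typename T>
bool find_in_data(const Sorted<T>& data, const T& value) {
    return std::binary_search(data.get().begin(), data.get().end(), value);
}
```

With this sketch, `find_in_data(sort_vector(std::vector<int>{5, 1, 4}), 4)` compiles, whereas passing a plain `std::vector<int>` to `find_in_data` does not. The cost is the one the article points at: the wrapper hides the rest of the vector's interface, which a real qualifier would leave available.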

Design

5 min read

Impressions of CanUX 2018

<div><p>The largest UX event in Canada, aptly named CanUX (pronounced “<i>canucks</i>”), takes place every year in November. Twenty speakers from all over the world share their perspective and practice of various aspects of <a href="https://www.spiria.com/en/services/human-centered-design/user-experience-design/">user experience</a>. With over 520 participants from every background, it makes for a stimulating conference! This year, I was lucky enough to be able to attend this event for the first time, at the Canadian Museum of History in Gatineau, Quebec.</p><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2860/canux2018-01.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2860/canux2018-01.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2860/canux2018-01.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2860/canux2018-01.webp" style="width: 100%; border-style:solid; border-width:1px;" alt="Canadian Museum of History." title="Canadian Museum of History."></source></source></source></picture></p><p>Thanks to top-notch planning, my overall experience as an audience member was very enjoyable. Particular attention went into making participants who were attending alone feel welcome. In fact, the whole event was specifically designed to support interaction and discussions among attendees. At every break and during down times, we were offered snacks and for lunch, we were divided into groups and assigned a restaurant close to the Museum. This allowed us to meet and talk to new people. The scheduling was to-the-minute. 
All the information about the two days of the meeting was clearly presented in the handbook that was handed to us at the beginning of the event and that doubled as an ID badge.</p><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2860/canux2018-02.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2860/canux2018-02.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2860/canux2018-02.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2860/canux2018-02.webp" style="width: 100%; border-style:solid; border-width:1px;" alt="CanUX 2018." title="CanUX 2018."></source></source></source></picture></p><p>Having attended many presentations, I found some of them captivating, and some less relevant. Here are the two most memorable ones:</p><h2>Digital Life after Death</h2><p>This touching presentation by <a href="https://canux.io/alberta-soranzo/">Alberta Soranzo</a> got us thinking about what happens to people’s social network accounts after death. Should there be laws to require the closing of these accounts after someone is deceased? To allow users to indicate in their profile settings how they want their accounts handled after they die? Should the next-of-kin have access to the account information after the death of their loved one? Most likely, no one wants their private on-line conversations to be disclosed to anyone. The speaker gave a concrete example of her own Facebook account which, year after year, reminds her of the birthday of a deceased person. Well-meaning Facebook friends unwittingly wish that deceased person a happy birthday. 
LinkedIn, for its part, still suggests that she connect with this person, five years after their death.</p><p>As a designer, I learned from this presentation that creating a positive <i>offboarding</i> experience is just as important as the <i>onboarding</i> experience. You have to think the experience through from the beginning right up until the very end.</p><h2>Prison Door Controls</h2><p>In his presentation, <a href="https://canux.io/mark-bottomley/">Mark Bottomley</a> showed us the dashboard of a control desk in a correctional facility. This presentation underscored the importance of examining the way a system is used in context: physical environment, types of users, types of doors, security level, daily operations including emergency situations. For example, a locked door shows up as green, whereas an unlocked one is displayed in red. As an added precaution, a symbol is superimposed on the colours for people who are red-green colour-blind. In addition, the doors are controlled by physical, mechanical buttons rather than by touch-screen buttons, because the operator must keep his eyes fixed on the prison guard, who gives him a sign when the door can be released. Finally, there are several different types of lock settings: a door can be locked for everyone, or just in one direction, for example when detainees lock their door when leaving their cell to deter theft. These scenarios were played out in a demonstration of the system. Obviously, the risk level of such a system is extremely high; it just goes to show that all decisions must be based on users and context. 
I particularly enjoyed this presentation because this type of system is so specialized and unusual.</p><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2860/canux2018-03.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2860/canux2018-03.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2860/canux2018-03.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2860/canux2018-03.webp" style="width: 70%; border-style:solid; border-width:1px;" alt="A great designer convinces you half that shit is unnecessary." title="A great designer convinces you half that shit is unnecessary."></source></source></source></picture></p><p>In general, I found the presentations to be interesting and informative. <a href="https://canux.io/mike-monteiro/">Mike Monteiro</a> made a witty presentation on a serious issue: the ten golden rules of a designer. For example: “A good designer finds an elegant way to put everything you need on a page. A great designer convinces you half that shit is unnecessary”.</p><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2860/canux2018-04.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2860/canux2018-04.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2860/canux2018-04.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2860/canux2018-04.webp" style="width: 70%; border-style:solid; border-width:1px;" alt="Rose-Marie Bouthat." title="Rose-Marie Bouthat."></source></source></source></picture></p><p>A good designer must be curious and ask questions. 
The importance of understanding the user and being aware that others think differently than we do was brought up time and time again in many different presentations. I also learned the importance of not being wedded to our ideas. We must be able to cast them aside when they don’t work, the important thing being to remain flexible and to adapt. I returned to the office with tons of good ideas and with the determination to apply them to my projects. Would I go back in 2019? I’d love to, though I think I’d get more out of it if two of us attended, so that we could bounce ideas off each other and debate the various issues, applying them to our context at <a href="https://www.spiria.com/">Spiria</a>.</p></div>

Dev's Corner

5 min read

Hypothetical C++: easy type creation

<div><p>When I look at typical C++ code, I see a lot of uses of simple built-in types: <code>int</code>, <code>float</code>, <code>double</code>, <code>std::wstring</code>, <code>const char *</code>, even though in each instance they may be used for wildly different purposes. One may be a width or a height, another a temperature, a third, a range limit.</p><p>The reason programmers go for these simple types is easy to explain: they’re built-in, they have a lot of support functions, and their behavior is well known. But there is another reason behind their ubiquity: programming languages make it a chore to create a new type with enough functionality.</p><p>What if this weren’t the case? What I’d like to see in C++ is an easy way to create a new type from an existing one.</p><h2>The current state of affairs</h2><p>C++ has the <code>typedef</code> keyword, but that merely creates a synonym for an existing type, and the two are interchangeable. You cannot create <code>Celsius</code> and <code>Fahrenheit</code> types from <code>double</code> safely with a <code>typedef</code> because nothing will prevent adding <code>Celsius</code> to <code>Fahrenheit</code>.</p><p>On the surface, this property of <code>typedef</code> seems useful. If all we need is a short-hand version for a long type declaration or a semantic name for a type, <code>typedef</code> fits the bill. Or does it? Using a pure short-hand version saves some typing but does not introduce meaning. On the other hand, giving the <code>typedef</code> a semantic name, for example <code>ListOfPeople</code>, helps in understanding the code, but does not prevent assigning a list of file-names to it by mistake.</p><p>The current way to avoid such typing errors is to wrap the type in a class. The downside is that you have to painstakingly forward every member function, or create a lot of operators. The fact that this solution exists and yet is rarely used should tell us something. 
Code is still littered with <code>int</code>s, <code>double</code>s and <code>typedef</code>s, because they’re easy to create.</p><p style="display: block;padding: 0.5em 2em 0.7em 2em;margin: 1em 0 2em 0;background-color: #fff;border-radius:4px;box-shadow: 1px 7px 15px 0 rgba(47,57,64,0.2);text-align: center;"><b><i>Did you know?</i></b><br>Spiria’s teams have extensive experience in the development of complex <a href="https://www.spiria.com/en/services/purpose-built-development/desktop-application-development/">desktop applications</a> and can help you on any large-scale project.</p><h2>Easy Type Creation</h2><p>We need something as simple as a <code>typedef</code> which will actually create a new type. Let’s call it <code>typedecl</code>. Ideally, it would be so simple to use that programmers will reach for it by default. The barrier to entry should be as low as possible. Here’s what we need <code>typedecl</code> to do:</p><ol> <li>Create a new type.</li> <li>Allow easy declaration of literals.</li> <li>Allow basic internal functionality automatically.</li> <li>Allow easy additional external functionality.</li></ol><h3>1. Create a new type</h3><p>Creating a new type is easy. Just make it the definition of <code>typedecl</code> in the language. With a new type, we avoid accidental assignments and allow function overloading. Taking our <code>Celsius</code> and <code>Fahrenheit</code> example, here are two function declarations that could not be written side-by-side if they were a <code>typedef</code>:</p><pre><code>Celsius convert(Fahrenheit);
Fahrenheit convert(Celsius);</code></pre><p>While anyone could come up with a naming scheme to allow this to work with <code>typedef</code>, the fact that you need to come up with such a scheme and, more importantly, that you need to worry about such an issue in the first place, points to the problem of not having a unique type for each.</p><h3>2. 
Declaration of literals</h3><p>Easy declaration of literals is important for usability. Without usability, the feature would not be used. Somewhat like how a numeric literal will automatically be silently typed as an <code>int</code>, <code>long</code> or <code>double</code> if it fits the limits of the type, the same behaviour should be supported by <code>typedecl</code>.</p><h3>3. Allow internal functionality</h3><p>The need for internal functionality is again to fulfill our need for usability. For example, with numerical types (<code>int</code>, <code>double</code>, ...) we don’t want to have to declare all possible operations between two variables. If it’s tedious, the <code>typedecl</code> won’t be used, just like wrapping an integer in a class is rarely done. The same should be true for more complex types that are used as the basis for a <code>typedecl</code>. A <code>typedecl</code> based on a <code>std::string</code> should bring its member functions, with all instances of <code>std::string</code> parameters replaced with the new type.</p><h3>4. Allow external functionality</h3><p>The hardest part is the last: allowing useful external functionality. Once again, how easy it is for the programmer will have a direct influence on how often it is used. It should be easy to clone an existing function for the new type. Ideally, it should be easy to clone a whole group of functions. The syntax I suggest is to reuse the same keyword, <code>typedecl</code>, with a <code>clone</code> modifier. This would allow the cloning of one or more functions. For example:</p><pre><code>typedecl Celsius clone std::abs;
typedecl Celsius clone { std::abs, std::pow, ... };</code></pre><p>Ideally, it should be easy to clone an entire namespace, too:</p><pre><code>typedecl Celsius clone namespace std;</code></pre><p>Unfortunately, this is too broad and far-reaching in many cases. 
Ideally, C++ would gain an equivalent of <code>namespace</code> that does not add an identifier you have to type while programming, but merely creates a semantic grouping that can be referred to. For example, all trigonometric functions could be grouped under one semantic, all I/O functions under another. Here’s what this hypothetical language feature could look like:</p><pre><code>namespace std
{
   namespace semantic trig
   {
      double cos(double);
      double sin(double);
   }

   namespace semantic io
   {
      ostream& operator << (ostream&, double);
      // ...
   }
}</code></pre><p>With this feature, the <code>cos()</code> function could still be accessed directly within the <code>std</code> namespace. Naming the <code>trig</code> semantic <code>namespace</code> would be allowed, but optional. Cloning all trigonometric functions would then merely be:</p><pre><code>typedecl Celsius clone namespace std::trig;</code></pre><p>In some cases, it may be useful to change only some parameters of a function. In that case we could borrow the syntax of a deduction guide to give the compiler a map of how the automatic conversion should be done. For example:</p><pre><code>typedecl Celsius clone double std::pow(double, double) -> Celsius std::pow(Celsius, double);</code></pre><h2>The payoff</h2><p>Now, I will show a few examples of code improvements that can be achieved with <code>typedecl</code>. First, it can lead to fewer coding errors where arguments to a function are misplaced:</p><pre><code>// Our world...
void foo()
{
   int width = get_width();
   int height = get_height();
   bool stroked = should_stroke();
   bool filled = should_fill();

   // Is this call correct?
   draw_rect(width, height, stroked, filled);
}

// Hypothetical C++ world...
void foo()
{
   Width width = get_width();
   Height height = get_height();
   Stroked stroked = should_stroke();
   Filled filled = should_fill();

   // The order of the arguments is necessarily correct.
   draw_rect(width, height, stroked, filled);
}</code></pre><p>Second, it allows overloads or templates to be specialized based on the semantics of a type instead of purely on its mechanical type. This is much better than <code>typedef</code>. With <code>typedef</code>, you need to know what the underlying type is to know if an overload or template instantiation really is different. If you used a <code>typedef</code> from a third party, you would have to wrap it in a class, with all the interfacing annoyance. As an example, take the <code>std::variant</code> type. It allows you to access its elements by their type, but if two elements have the same type, then there is ambiguity. With <code>typedecl</code>, having different types makes this problem disappear.</p><h2>Conclusion</h2><p>With these changes to C++, we could finally get rid of a lot of incidental usage of purely mechanical types. There would no longer be any reason to use bare <code>int</code>, <code>double</code>, <code>std::string</code>, <code>std::map</code>, etc. in code. We could program with meaningful types that would provide even more type safety than we currently achieve because creating them would be simple.</p></div>
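<p>Until something like <code>typedecl</code> exists, part of its effect can be approximated in today's C++ (C++17 here) with a tagged wrapper template. What follows is only a minimal sketch under that assumption, not the feature proposed above: <code>Strong</code>, the tag structs and <code>usage_example</code> are hypothetical names introduced for illustration. Note that the operators and the <code>abs</code> overload have to be written by hand, which is precisely the tedium the proposal aims to remove.</p>

```cpp
#include <cassert>
#include <cmath>
#include <type_traits>
#include <variant>

// Hypothetical helper (not a standard facility): wraps an underlying type T
// and uses an otherwise unused Tag type to make each instantiation distinct.
template <typename T, typename Tag>
class Strong {
public:
    constexpr explicit Strong(T value) : value_(value) {}
    constexpr T get() const { return value_; }

    // "Internal functionality" must be spelled out operator by operator.
    friend constexpr Strong operator+(Strong a, Strong b) { return Strong(a.value_ + b.value_); }
    friend constexpr bool operator==(Strong a, Strong b) { return a.value_ == b.value_; }

private:
    T value_;
};

using Celsius = Strong<double, struct CelsiusTag>;
using Fahrenheit = Strong<double, struct FahrenheitTag>;

// Unlike two typedefs of double, the two types do not convert into each other.
static_assert(!std::is_convertible_v<Celsius, Fahrenheit>, "types must stay distinct");

// Both overloads can coexist because their parameter types differ.
Celsius convert(Fahrenheit f) { return Celsius((f.get() - 32.0) * 5.0 / 9.0); }
Fahrenheit convert(Celsius c) { return Fahrenheit(c.get() * 9.0 / 5.0 + 32.0); }

// "Cloning" std::abs for the new type is manual, one function at a time.
Celsius abs(Celsius c) { return Celsius(std::abs(c.get())); }

void usage_example() {
    const Celsius boiling = convert(Fahrenheit(212.0));
    assert(std::abs(boiling.get() - 100.0) < 1e-9);
    assert(abs(Celsius(-5.0)) == Celsius(5.0));

    // With distinct types, access by type in a std::variant is unambiguous,
    // which std::variant<double, double> cannot offer.
    const std::variant<Celsius, Fahrenheit> reading = Fahrenheit(72.0);
    assert(std::holds_alternative<Fahrenheit>(reading));

    // Celsius wrong = Fahrenheit(72.0);  // would not compile
}
```

<p>The wrapper gives the overloading and the protection against accidental assignment described in the article, but literals still need an explicit constructor call and every external function has to be re-declared by hand.</p>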

Custom Development

5 min read

Eight things to bear in mind about software development

<div><h2>Find a common language</h2><p>As is the case in many industries, IT professionals have their own language. Developers might use words or refer to concepts that are not familiar to you. Do not hesitate to ask us to explain—it’s our job! Always ask for clarification when things seem obscure. This is crucial, because your understanding is the foundation of quality communication, which in turn becomes the basis for well-informed decisions. Good developers will be happy to share their knowledge with you, to ensure that you understand key concepts.</p><h2>Explain what you do</h2><p>Just as developers explain their job to you, you also need to explain yours to them. Do not be stingy with information; a client can never give too much. The more a team knows about the specifics of your company, your market, and your processes, the better it will understand your expectations, your current activity, and your future plans. A development team that is imbued with your vision is better positioned to provide the best answer to the problem you want to solve and to anticipate your future needs. We can’t say it enough: good two-way communication is crucial to the success of a software project.</p><h2>Take the time it takes</h2><p>Most software projects take weeks or months to complete. Regardless of the number of people on the team, some essential steps will always take time if you care about the quality of the delivered product: planning, design, development, testing. We know that you would like your software delivered to you as quickly as possible, so that you can begin reaping the fruits of your investment. However, there is no magic solution, unless you compromise on features or quality. 
If your deadlines are really short due to various circumstances, it might be necessary to start with a <a href="https://www.spiria.com/en/blog/custom-development/mvps-your-most-valuable-plan-to-hit-the-market-swiftly/">minimum viable product</a> (MVP)--software that just meets the essential requirements--and postpone the development of some features for a later version.</p><h2>It takes more than programmers</h2><p>Software development involves a wide range of specialists who don’t actually or always write code. It's a team effort: business analysts, designers, interface designers, project managers, Scrum Masters, quality analysts, support staff are all professionals who contribute to making your software exceptional. Some stay with the entire project, others intervene at certain points.</p><h2>Features and quality don’t always go hand-in-hand</h2><p>Focusing on the quantity of features rather than on their quality is misguided. You must question the purpose of each feature. Clearly defining a minimum viable product (MVP) will help you focus on what is really important. During development or after the delivery of a first version, it is quite common to realize that some functions that were envisioned at the beginning of the project are in fact useless, and that leaving them for later ended up saving time and money. And conversely, it is not unusual that initial users’ feedback leads to the enhancement of some functions and the creation of others that had not been envisaged, but now seem obvious. <a href="https://www.spiria.com/en/services/purpose-built-development/quality-assurance-test-automation/">Software quality</a> is not the sum of its features, but rather the measure of user satisfaction. This requires user needs satisfaction, user-friendly and ergonomic interfaces, bug-free software, etc., rather than a program packed with features that will never be used. 
Piling on features also adds a layer of complexity that jeopardizes the flow and deadlines of the project. You will save more money by choosing a scalable product with incremental improvements than by attempting to do everything right away, which usually doesn’t work out well.</p><h2>You get what you pay for</h2><p>The same goes for software development as for everything else. A house built with low-end materials will be less durable, and over the years, it will lose value and cost more in maintenance. A fifty-dollar Ikea bookshelf made of melamine chipboard is less solid and durable than a hardwood one that costs hundreds of dollars. Everyday life offers many more examples. There are always consequences to making the cheapest choice, to doing things half-way, or to cutting corners to go faster. Immediate savings tend to translate into proportionally higher costs in the medium and long term. Therefore, if your software is to evolve, it is essential to invest in a robust first version that will serve as a foundation for the next versions. Solid foundations pave the way for future savings.</p><h2>The art of change</h2><p>Unless the project is simple, it is almost impossible to plan for everything from the start. A multitude of factors mean that few things remain forever cast in stone. Unpredictability is an intrinsic part of the software development process. Your request may change over time, market conditions or your customers’ expectations may change, marketing imperatives may require you to launch earlier, a complex feature may take more time than expected, changing software environments may require adaptations, etc. Software development requires staying open to changes, because these are inescapable. 
This is why the software industry has unanimously adopted the Agile approach that makes it possible to manage change in the most economical, secure and flexible way possible.</p><h2>It doesn’t stop at delivery</h2><p>To stay functional and efficient, software requires maintenance after delivery—it’s similar to the building analogy. We call it <a href="https://www.spiria.com/en/services/purpose-built-development/support-maintenance/">postproduction support</a>. You have to determine from the beginning of the project who will do it and how much it will cost, because it takes people to look after your software and anticipate pitfalls. In addition to routine maintenance, such as solving problems that inevitably occur with changes in your hardware and software environment for example, your users will come to you with new requests. In short, software lives on after its delivery and it’s important to understand that at the outset.</p></div>

Custom Development

5 min read

Identify the right software development partner

<div><h2>How do you know a software development company is right for you?</h2><p><b>The company seems genuinely interested in your project</b> and asks relevant questions. They immediately go into solutions mode. If you perceive a lack of enthusiasm or even some hesitation, it doesn’t necessarily mean that they’re not a reliable company. It could mean that your project doesn’t fit with their service offering: maybe they’re seeing a capability gap between your needs and their capacity; maybe it’s a question of budget, of know-how, of experience, etc. Maybe that company just isn’t the one best suited for your project.</p><p><b>The company is located near you.</b> Try to use a local supplier: there’s nothing like direct human contact to assess the fit between your needs and their service offering. Use your favorite search engine to search “software development + name of your city”. You can even be more specific, for example: “web application development + city”.</p><p><b>The company understands you.</b> They speak your language and display business intelligence. Assess your cultural compatibility and the quality of your communication. The success of a software project depends on a good mutual understanding. It isn’t enough to contract the best developers out there; they also have to understand your needs precisely. Your partner must have a thorough understanding of your line of work and business goals in order to turn your ideas into an efficient, effective program.</p><p><b>The company educates you.</b> They take the time to explain what you don’t understand, and continue explaining until you do. They don’t baffle you with a barrage of technical jargon.</p><p><b>The company can say “no”.</b> Be suspicious when a supplier says yes to everything without question. More trustworthy is the supplier who tells you when something isn’t possible, or suggests a different way of achieving your goals. Choose a supplier that opens up new perspectives, that contributes new ideas. 
In other words, a supplier that can improve on your initial idea, rather than just blindly following instructions.</p><p><b>The company masters several different technologies.</b> If you don’t have specific technical constraints, choose a supplier that can work with a wide range of technologies. This way, they can advise you on the most appropriate technology for your project, and even suggest more efficient or cheaper alternatives.</p><p><b>The company provides the whole range of services.</b> From upstream consulting to downstream service and support, from UX/UI design to <a href="https://www.spiria.com/en/services/purpose-built-development/quality-assurance-test-automation/">QA</a>, successful software development involves far more than just coding.</p><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2803/web-dev.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2803/web-dev.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2803/web-dev.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2803/web-dev.webp" style="width: 100%; border-style:solid; border-width:1px;" alt="Web development." title="Web development."></source></source></source></picture></p><p><b>The company uses flexible methodologies.</b> Be it Cascade, Agile, Scrum, Kanban, or a blend of the above, there are many ways to conduct a software project. Your supplier should be able to use the method that best suits your project, its scope and objectives, rather than box you into a one-size-fits-all methodology.</p><p><b>The company provides credible references.</b> Any software development company worth its salt proudly displays concrete examples of its past projects on its Web site. 
This is a good way for you to assess their experience, the variety of their projects and the size of their clients. Don’t be shy about poking your nose into their project portfolio. If you have any doubt about any of their references, check them out, just as you would for a new hire.</p><p><b>The company’s size is similar to yours.</b> Generally speaking, you’re better off being your supplier’s most important client, in order to receive VIP treatment. If the company is a large multinational and you’re an SME, you run the risk of being lost in the crowd. On the other hand, if your supplier is a much smaller company than yours, it may not be able to offer you the whole range of services and support you need for growth.</p><p><b>The company isn’t the lowest bidder.</b> When it comes to development, cheap is not good. Huge price differences between bidders usually mean that at least one of them hasn’t properly estimated the development effort and technical challenges involved. Don’t be afraid to grill the bidders on specific points of their bid to probe the credibility of their offer and ensure that they fully understand the nature of your requirements. Too low a bid is a strong predictor of failure: basic needs unmet, late delivery, technical debt, poor code quality, etc.</p><p><b>The company makes you want to work with them.</b> It may seem silly, but affinity is essential, since the success of any software project, especially if it is meant to solve a complex business problem, rests on the quality of your collaboration throughout the development process. You must feel comfortable with them right off the bat, and feel that you’ll be able to work with them successfully and harmoniously over the long term. Remember, you’re going to have to work with them for months!</p></div>

Best Practices

5 min read

Agile: less recipes, more state of mind

<div><p>The first tenet of the Agile Manifesto is that it focuses on people and their interactions rather than on the methods and tools, to counteract what usually happens in a traditional setting, where processes and tools reign supreme.</p><p>Agile has become a business, even fostering an entire industry, with specialized companies, consultants, and software vendors all promising the moon and the stars, to their own benefit: adopt our methods and your productivity will soar. Then everyone thinks they’re Agile because they use the methods, tools, terminology and rituals. It goes without saying: a practice followed to the letter but not in spirit doesn’t cut it.</p><blockquote style="text-align: center; font-size: 1.2em !important; font-style: italic;"><p>“Individuals and interactions<br>over processes and tools.”</p></blockquote><p>Besides living in an era of “fake news”, we’re also in an era of “fake agility” when it comes to software development. Have you read the manifesto for Agile software development yet? You’ll be hard-pressed to find anyone in a development team who has done so, or who can recite any of the four values or twelve underlying principles. Some companies call themselves Agile without having the least notion of what Agile culture is. Others have the best intentions but get stuck micromanaging and lose sight of the overarching goals. They don’t really get that Agile is a state of mind. Some teams are honest: “Sure, we do sprints and dailies. We assign points to stories. We have a scrum master who’s actually a project lead. That’s about as far as it goes.”</p><blockquote style="text-align: center; font-size: 1.2em; font-style: italic;"><p>“Our highest priority is to satisfy the customer<br>through early and continuous delivery<br>of valuable software.”</p></blockquote><p>Agile is an outlook that rests on four values and defines itself according to twelve principles. 
It’s a sort of philosophy coupled with moral principles that translates into an infinite number of practices. Agile is not like XP, a box of tools that you can choose from according to your needs. It’s a holistic thought pattern that guides practice with key objectives, such as client satisfaction. A satisfied customer doesn’t care whether you faithfully do your 15-minute stand-up each day. The question you should be asking is, “Does our daily stand-up get us closer to our goal? Is it helpful or is it just a waste of time?” Is the team thinking about ways to be more productive and then adjusting its behaviour accordingly (12<sup>th</sup> principle)? Or is it just performing empty rituals without asking itself whether they’re furthering the project, and never trying new ways of doing things? Is Kanban perhaps more appropriate for this project?</p><p>Those are the kinds of questions that reveal a true understanding of Agile. Blindly following methodologies and prescriptive rules doesn’t demonstrate that you can adapt rather than rigidly follow a plan (4<sup>th</sup> value in the Manifesto, summarized as “pragmatism”).</p><blockquote style="text-align: center; font-size: 1.2em; font-style: italic;"><p>“Responding to change<br>over following a plan.”</p></blockquote><p>The point of Agile is not to fit people into a mould, forcing them to follow practices unquestioningly, without a concern for how they think, interact, or collaborate. Again, the first value of the Manifesto is clear: individuals and their interactions are at the heart of the methodology, and tools and procedures are secondary. The latter are always in the service of the former.</p><p>The origin of the term Agile, and what makes it superior to the waterfall model, is its emphasis on adaptability to change. Using the same practices and tools day in, day out, on all projects, no matter their scope and specificity, is not a sign of adaptation, but of inflexibility. 
A team can lift the practices that have worked in other situations, or it can create its own methods for the current context. Meaning a team must be allowed to find its own groove. When thinking through Agile practices, the only hard limit is the ultimate goal, the Manifesto principles’ highest priority: swiftly and regularly deliver great value-added functionalities to satisfy the client. If the client isn’t satisfied, check the fundamental soundness of your practices.</p><blockquote style="text-align: center; font-size: 1.2em; font-style: italic;"><p>“At regular intervals, the team reflects on how<br>to become more effective, then tunes and adjusts<br>its behaviour accordingly.”</p></blockquote><p>Finally, all team members must know and understand the Agile Manifesto and soak in its philosophy. Get used to reading and rereading the Manifesto to fully understand the purpose of its precepts, to discuss them in a group so that everyone is on the same page. Keep in mind that Agile is above all an evergreen concept to be constantly nurtured and rethought.</p></div>

Dev's Corner

5 min read

Building a web radio with a Raspberry Pi

<div><div><p>A mix of past and modern technology, this project was born out of the purchase, for a handful of dollars at a yard sale, of the superb (and completely empty) cabinet of a 1933 Emerson radio set.</p><p>After some minor restoration work, we are now ready to install an ultra-modern Raspberry Pi!</p><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/20151011_200847.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/20151011_200847.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/20151011_200847.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2672/20151011_200847.jpg" alt="decorative"></source></source></source></picture></p><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/20151011_200855.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/20151011_200855.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/20151011_200855.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2672/20151011_200855.jpg" alt="decorative"></source></source></source></picture></p><h2>1. The shopping list</h2><ul> <li><a href="https://www.raspberrypi.org/products/model-a-plus/">A Raspberry Pi Model A+</a></li> <li>One SD card</li> <li>A WiFi USB dongle</li> <li>A 12-position rotary switch</li> <li>A 10K potentiometer equipped with a switch</li> <li>A 5V 500mA power supply</li> <li>A low-frequency amplifier kit</li></ul><h2>2. 
The schematic</h2><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/radio_schamatic.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/radio_schamatic.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/radio_schamatic.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2672/radio_schamatic.webp" alt="decorative"></source></source></source></picture></p><p>The audio output of the Raspberry Pi is connected to the input of a small amplifier that accepts a 3 to 16V power supply, and networking is provided by a WiFi dongle.</p><p>Note that:</p><ul> <li>The amplifier is not connected directly to the 5V supply (otherwise you will hear noise from the CPU). A small regulator (LM7805 adjusted to 4V) and a 250uF capacitor at the output will filter the line cleanly.</li> <li>The potentiometer (P2) that adjusts the gain also has a built-in switch, which mechanically cuts the power supply when the knob is turned to its minimum.</li> <li>"Ready Indicator" is an LED connected to a GPIO; it indicates whether the WiFi network is available.</li> <li>The rotary switch (P1) is connected to 12 GPIOs that are configured as pull-down by default on the Raspberry Pi.</li></ul><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/untitled.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/untitled.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/untitled.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2672/untitled.webp" 
alt="decorative"></source></source></source></picture></p><h2>3. Montage</h2><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/20151011_200635.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/20151011_200635.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/20151011_200635.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2672/20151011_200635.jpg" alt="decorative"></source></source></source></picture></p><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/20151011_200832.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/20151011_200832.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2672/20151011_200832.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2672/20151011_200832.webp" alt="decorative"></source></source></source></picture></p><h2>4. 
The software</h2><h3>4.1 Raspbian (wheezy) installation</h3><p>Image available here: <a href="https://www.raspberrypi.org/downloads/raspbian/" target="_blank">https://www.raspberrypi.org/downloads/raspbian/</a></p><p>Installation instructions here: <a href="https://www.raspberrypi.org/documentation/installation/installing-images/" target="_blank">https://www.raspberrypi.org/documentation/installation/installing-images/</a></p><p>WiFi configuration: <a href="https://www.raspberrypi.org/documentation/configuration/wireless/wireless-cli.md" target="_blank">https://www.raspberrypi.org/documentation/configuration/wireless/wireless-cli.md</a></p><h3>4.2 Installation of mpd/mpc (web radio player)</h3><pre><code>sudo apt-get install mpd mpc</code></pre><p>The list of mpc commands is available via:</p><pre><code>mpc help</code></pre><p>More info is available here: <a href="http://www.musicpd.org/doc/user/">http://www.musicpd.org/doc/user/</a></p><p>Of particular note:</p><p>To add a web radio to the playlist:</p><pre><code>mpc add &lt;url&gt;</code></pre><p>Examples:</p><pre><code>mpc add http://www.radiofeeds.co.uk/bbcradio1.pls # BBC
mpc add http:... # Radio Canada</code></pre><p>To add an mp3 (after copying it to the correct directory on the SD card):</p><pre><code>mpc update
mpc add &lt;file&gt;</code></pre><h3>4.3 Writing a script to select the radio station</h3><p>The principle is to write a Unix daemon that polls the GPIOs in order to map each position of the station selection switch (P1) to a playback position in the playlist.</p><pre><code>#!/bin/sh
### BEGIN INIT INFO
# Provides:          radio_ctl.sh
# Required-Start:
# Required-Stop:
# Default-Start:     S
# Default-Stop:
# Short-Description: Connect GPIO to mpc playlist
# Description:       Connect GPIO to mpc playlist
### END INIT INFO

daemon_NAME="radio_ctl.sh"
export PIDFILE=/tmp/radio_ctl
PATH="/sbin:/bin:/usr/sbin:/usr/bin"

# To install:
#   sudo cp radio_ctl.sh /etc/init.d/. && sudo chmod a+x /etc/init.d/radio_ctl.sh
#   sudo update-rc.d radio_ctl.sh defaults
# To uninstall:
#   sudo update-rc.d -f radio_ctl.sh remove

. /lib/lsb/init-functions

# GPIOs wired to positions 1 to 12 of the rotary switch (P1), in order.
GPIOS="23 24 25 12 13 26 19 21 20 22 27 17"

d_start () {
    V=0
    VOLD=0

    # Expose each GPIO through sysfs (they are inputs by default).
    for GPIO in $GPIOS; do
        echo "$GPIO" > /sys/class/gpio/export
    done

    while true; do
        # The switch position is the index of the GPIO that reads 1.
        POS=0
        for GPIO in $GPIOS; do
            POS=$((POS + 1))
            [ "$(cat /sys/class/gpio/gpio$GPIO/value)" = "1" ] && V=$POS
        done

        # Change station only when the switch has moved.
        if [ "$VOLD" != "$V" ]; then
            VOLD=$V
            mpc play "$V"
            echo "station $V"
        fi
        sleep 0.1
    done
}

d_stop () {
    if [ -e "$PIDFILE" ]; then
        kill "$(cat "$PIDFILE")"
        rm -f "$PIDFILE"
    fi
}

case "$1" in
    start)
        if [ -e "$PIDFILE" ]; then
            log_daemon_msg "Daemon $daemon_NAME already running"
            log_end_msg $?
        else
            log_daemon_msg "Starting system $daemon_NAME Daemon"
            log_end_msg $?
            d_start &
            echo $! > "$PIDFILE"
        fi
        ;;
    stop)
        if [ -e "$PIDFILE" ]; then
            log_daemon_msg "Stopping system $daemon_NAME Daemon"
            log_end_msg $?
            d_stop
        fi
        ;;
    restart|reload|force-reload)
        d_stop
        # Run in the background and record the PID, as "start" does.
        d_start &
        echo $! > "$PIDFILE"
        ;;
    force-stop)
        d_stop
        killall -q $daemon_NAME || true
        sleep 2
        killall -q -9 $daemon_NAME || true
        ;;
    status)
        status_of_proc "$daemon_NAME" && exit 0 || exit $?
        ;;
    *)
        echo "Usage: /etc/init.d/$daemon_NAME {start|stop|force-stop|restart|reload|force-reload|status}"
        exit 1
        ;;
esac
exit 0</code></pre><p>Mea culpa: this shell script is clearly not the most efficient (it polls the GPIOs every 100 ms), but it has the merit of being simple and of having been written very quickly.</p><h3>4.4 Writing a script to light an LED when the WiFi network is available</h3><pre><code>#!/bin/sh
### BEGIN INIT INFO
# Provides:          wlan_led.sh
# Required-Start:
# Required-Stop:
# Default-Start:     S
# Default-Stop:
# Short-Description: led on if router answer to ping
# Description:       led on if router answer to ping
### END INIT INFO

daemon_NAME="wlan_led.sh"
export PIDFILE=/tmp/wlan_led
PATH="/sbin:/bin:/usr/sbin:/usr/bin"

# To install:
#   sudo cp wlan_led.sh /etc/init.d/. && sudo chmod a+x /etc/init.d/wlan_led.sh
#   sudo update-rc.d wlan_led.sh defaults
# To uninstall:
#   sudo update-rc.d -f wlan_led.sh remove

. /lib/lsb/init-functions

d_start () {
    # GPIO 18 drives the "Ready Indicator" LED.
    echo 18 > /sys/class/gpio/export
    echo out > /sys/class/gpio/gpio18/direction

    while true; do
        # LED on if the router answers a single ping, off otherwise.
        if ping -t1 -w1 -c1 192.168.1.1 > /dev/null; then
            echo 1 > /sys/class/gpio/gpio18/value
        else
            echo 0 > /sys/class/gpio/gpio18/value
        fi
        sleep 10
    done
}

d_stop () {
    if [ -e "$PIDFILE" ]; then
        kill "$(cat "$PIDFILE")"
        rm -f "$PIDFILE"
    fi
}

case "$1" in
    start)
        if [ -e "$PIDFILE" ]; then
            log_daemon_msg "Daemon $daemon_NAME already running"
            log_end_msg $?
        else
            log_daemon_msg "Starting system $daemon_NAME Daemon"
            log_end_msg $?
            d_start &
            echo $! > "$PIDFILE"
        fi
        ;;
    stop)
        if [ -e "$PIDFILE" ]; then
            log_daemon_msg "Stopping system $daemon_NAME Daemon"
            log_end_msg $?
            d_stop
        fi
        ;;
    restart|reload|force-reload)
        d_stop
        # Run in the background and record the PID, as "start" does.
        d_start &
        echo $! > "$PIDFILE"
        ;;
    force-stop)
        d_stop
        killall -q $daemon_NAME || true
        sleep 2
        killall -q -9 $daemon_NAME || true
        ;;
    status)
        status_of_proc "$daemon_NAME" && exit 0 || exit $?
        ;;
    *)
        echo "Usage: /etc/init.d/$daemon_NAME {start|stop|force-stop|restart|reload|force-reload|status}"
        exit 1
        ;;
esac
exit 0</code></pre><p>Again, a quick-and-dirty script, which lights an LED when the WiFi router (here 192.168.1.1) responds to a ping.</p><p>The test is performed every 10 seconds.</p><h2>5. Conclusion</h2><p>Here is a short video that shows the result.</p><div height="315px" width="560px"></div>

Mobile Development

5 min read

How to deliver quality?

<div><div><h2>Architecture with a team</h2><p>Our team had grown rapidly. We had to learn the client's business logic and respect time constraints. Under these circumstances, it was easy to accumulate technical debt. Over time, we felt that this was becoming an obstacle and that we needed to address the problem before it became more serious. We had to find a way to coordinate several teams and move them in a common direction. That's how our architecture grooming began.</p><p>This workshop takes place a few days before the start of a new sprint. The development team gets together and looks at the content of its next sprint. Each story is read and explained to the team to make sure everyone understands its objective. Next, the team looks at the architectural requirements. What will be the technical challenges? What part can be made into a reusable component? Do we have time to rework the code to pay off some technical debt, and what solution can we implement in the time available? Once these questions have been answered, the work to be done is clear to each team member. The technical solutions draw on the shared knowledge of the team, which makes them solid.</p><p>To ensure that the work is well coordinated between the teams, our Architecture Owner participates in each team's workshop. His role is to advise the teams and ensure that the architectural vision of the project is respected. But it remains the role of the team to find the solutions. The Architecture Owner also has to bridge the gap between the teams: he communicates and explains, as needed, the solutions that the other teams will implement during the next sprint.</p><p>Finally, following the review of the sprint with the Product Owner, we do an architectural review. The technical work is presented to the Architecture Owner and the other teams. 
We present the reusable components that have been created, the patterns that have been introduced, and any other relevant technical information that needs to be conveyed.</p><p>With these new ceremonies, the team is more aware of the architectural work and the technical challenges of the system, both those that have been completed and those that are still to come. </p><h2>Peer Quality Assurance</h2><p>It's never pleasant for a completed story to be reopened by the quality assurance team, and we found it problematic when this happened too often. In addition, the reopened story could date from a previous sprint, because the QA team had been too overloaded to test it sooner. Our solution was to introduce peer quality assurance.</p><p>Once a developer has finished a story, the code review has been accepted and the necessary testing is done, the changes go to a second developer before they are merged into the main source code. This second developer builds the changes on their own workstation. This keeps the changes from breaking the build, whether because a file was never added to the development branch or because the original developer's workstation is configured differently from the build machine. Once the build succeeds, the second developer tests the story to make sure there are no flaws or omissions in the business logic. Only when the second developer is satisfied can the development branch be merged into the main source code.</p><p>With this extra step, many of the problems that would otherwise have been caught by the automated build machine or by the QA team are found by the second developer. 
These problems are found and fixed more quickly, and the team's confidence in a finished story increases.</p><h2>Team Quality Assurance Session</h2><p>It's hard to be satisfied as a team with the work we do when the sprint review seems to turn into a debugging session. Yet a story delivered in the first week of a sprint can be affected by changes made near the end of that sprint. That's why we introduced team quality assurance sessions. </p><p>We started holding these sessions on the eve of a delivery, to intensively test everything that had been done. Every time we held such a session during a sprint, we found more defects and unmet business rules than in other sprints. By holding this session at the end of every sprint, two days before the review, we can clearly see the work done and the problems that might have escaped us during the sprint. The next day, we take the time to make whatever corrections we can. If a major problem has been found in a story and the team considers it unacceptable to deliver it as is, the story can be pulled from the source code and fixed at the beginning of the next sprint. This can delay its delivery, but we make sure the quality is there.</p><p>This spares the team unpleasant surprises during the review and increases confidence in the work that has been done. A team session also improves communication and helps everyone understand what the other developers did during the sprint.</p><h2>A cumbersome process?</h2><p>We consider each of our teams to be made up of reasonable people who have enough judgment to know when it is possible to skip a step. Our methodology is a guide, not a rigid structure. Correcting a syntax error does not require the same effort as rewriting the core logic of the system. Our teams are dedicated to their work and do not want to give our clients a poor-quality deliverable. So we maintain flexibility in the process. 
A team member may skip a step at any time if they feel it is reasonable to omit it. This avoids slowing down development unnecessarily.</p><p>Even so, since we introduced these steps into our methodology, we have questioned the time involved. Is the process too cumbersome and costly?</p><p>What we have found is that it reduces the velocity of each team and that the work takes a little longer. On the other hand, quality is greatly improved. The stabilization period before a delivery is much shorter, and the teams have greater confidence in the deliverables.</p><p>After delivery, the customer finds fewer defects, and those defects are less severe and have less impact, which avoids the need for rushed emergency fixes.</p><p>It is easier to predict the effort required to develop a delivery. We reduce the amount of work required at delivery and after it. Higher code quality makes it easier for teams to work together and to estimate new features.</p><p>In the end, both the team and the client are confident in the deliverable. Estimates are also closer to reality, and less maintenance effort is needed after a delivery.</p></div>

Culture

5 min read

Counting the homeless to improve their lives

<div><div><p>On a particularly balmy spring evening, a team of volunteers gathered to lend a hand with the second annual “Je Compte Montréal”, an initiative to count the homeless persons on the Island of Montreal. The event was organized by the <i>Mouvement pour mettre fin à l’itinérance à Montréal</i> (“Movement to End Homelessness in Montreal”).</p><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2524/count-montreal-02.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2524/count-montreal-02.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2524/count-montreal-02.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2524/count-montreal-02.webp" alt="Équipe Spiria." title="Équipe Spiria."></source></source></source></picture></p><p>The meeting point assigned to our team was the Downtown YMCA at 1440 Stanley Street, where an army of volunteers was hard at work welcoming other volunteers, team leaders, and teams.</p><p>The organizers had thought of everything, and in the organized chaos, we sat around a table to decide how to canvass our assigned sector. Our goal was to make contact with every homeless person present in the area bounded by Mansfield, Sainte-Catherine, Jeanne-Mance and René-Lévesque streets, in downtown Montreal.</p><p>At first, the task seemed Herculean, and it was with some trepidation that our group headed out to Jeanne-Mance Street, at the other end of our sector. Our approach was to start at the furthest point from the Y and work our way back, approaching passers-by on the way. The art of accosting strangers on the street doesn’t come naturally to most of us, and it took all our courage to set about our task.</p><p>But the question is, what, exactly, is homelessness? 
Is that guy over there, sitting on a bench, with a cellphone and a beat-up suitcase, actually homeless? What about that other guy eyeing us warily and slouching away? Or what about that couple kissing on the park bench, with their backpacks and large beers? Are they runaways, or tourists?</p><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2524/count-montreal-02b.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2524/count-montreal-02b.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2524/count-montreal-02b.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2524/count-montreal-02b.webp" alt="Équipe Spiria." title="Équipe Spiria."></source></source></source></picture></p><p>We were supposed to comb every last street, lane and back alley between 8 and 11 p.m., but as early as 7 p.m., we were sitting at the Place des Festivals, discussing the best way to proceed and eyeing passers-by.</p><p>We launched into our work with some excitement and infectious energy. Accosting a homeless person is no easy feat, especially since half the time they refused to speak to us, walked away or asked us to leave. </p><p>We were supposed to give them a $5 gift card at the end of our conversation, in exchange for their time, but maybe we would have had better luck if we had pulled it out as we approached them. The questionnaire had more than twenty questions spread over three pages. Some of the questions had sub-questions, depending on the answer. They dealt with place of residence, periods of homelessness, and stays in hospitals or help centres, all of which had to be answered. Some questions required the selection of just one answer, while others allowed several answers. 
In short, the questionnaire was difficult to use, and an electronic version loaded on a tablet would have made our task much easier. The hardest questions to ask were the ones about gender and sexual orientation. No question dealt with drug use.</p><p>The initial discomfort of dealing with homeless persons quickly turned into sheer energy, to the point that our group of six Spirians often split up into two separate teams, never the same twice, to cover the territory.</p><p>Having worked our way back to the western end of our sector, we decided to give it a second pass, to see if the urban fauna had changed. Imagine our surprise when we discovered that at store closing time, the streets that had seemed so familiar and safe turned into the hunting grounds of an entirely new type of fauna!</p><p>While homeless persons had been few and far between during our first sweep, we were now a minority in Sainte-Catherine Street’s urban crucible. Homeless persons were everywhere, carrying all their worldly possessions in their backpacks, faithful dog by their side, the outcasts of society looking for love, or just their next fix. The street was heating up as the night was cooling.</p><p>At the end of the evening, tired and sore-footed, we decided to call it a day. That’s when I learned that one of us would be sleeping at the office, on the floor, in a sleeping bag. I thought it was pretty funny, but only because it was not a permanent predicament.</p><p>I’d like to thank the brave and tactful people who volunteered to take part in this census of homeless persons in Montreal: Isabelle, Guy, Jean, Stephane and Cyril. I’d also like to thank Spiria, which supports us and makes this kind of initiative possible. 
</p><p><picture><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2524/count-montreal-03.400x0.webp" media="(max-width: 599px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2524/count-montreal-03.760x0.webp" media="(max-width: 999px)"><source type="image/webp" srcset="https://mirror.spiria.com/site/assets/files/2524/count-montreal-03.1039x0.webp" media="(min-width: 1000px)"><img src="https://mirror.spiria.com/site/assets/files/2524/count-montreal-03.webp" alt="Je compte MTL 2018." title="Je compte MTL 2018."></source></source></source></picture></p><h2>To conclude, a few personal reflections:</h2><p>What struck me, when looking at homelessness up close, was its diversity. So many lives upended by mental illness, derailed by toxic environments and successive foster homes, or poisoned by the insidious effects of substances used to dull physical or emotional pain. </p><p>There seems to be no single cause of homelessness. Each story is unique, and there is no miracle solution to this multifaceted problem. Of course, initiatives like last week’s can give a face to the problem, but they are only a tool to assess the problem, not a cure. It remains to be seen whether they will be followed up by concrete action.</p><p>As for me, I’ll never be able to look at homeless persons the same way again. What can we do about it? We can start by listening to them. Homeless persons are only bruised and battered humans trying to find peace with what little they have.</p></div>

Strategy

5 min read

The cost of legacy systems