Spiria Tech Recap - № 380 - Raspberry Pi 5, Amazon+Anthropic, macOS Sonoma, data compression, etc.

Raspberry Pi 5 arrives at the end of October

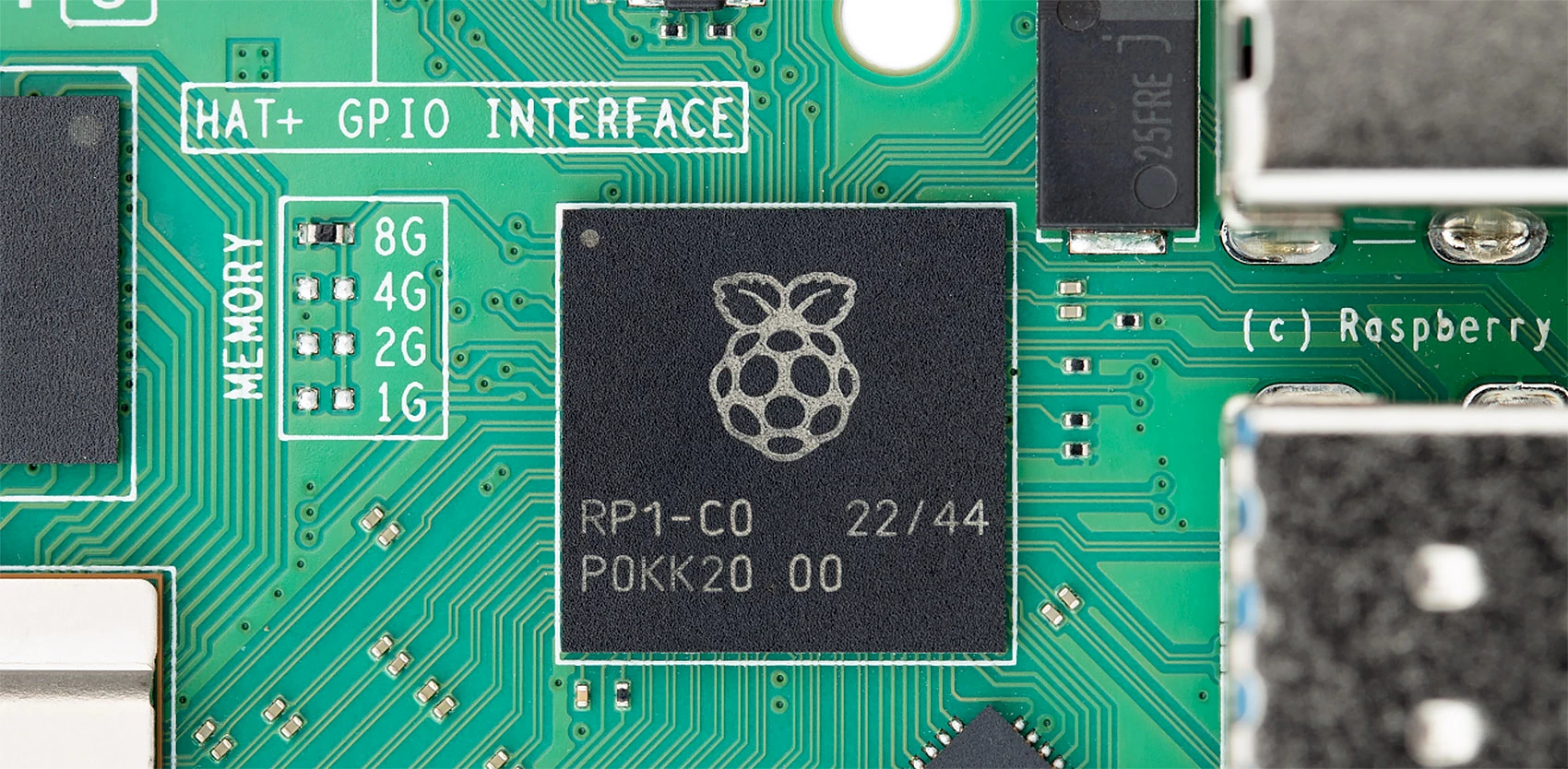

Raspberry Pi 5. © Raspberry Pi Ltd.

Raspberry Pi CEO Eben Upton announced the release of the company’s fifth-generation single-board computer with an Arm processor. The Raspberry Pi 5, which will run you 60 USD for 4GB of RAM or 80 USD for 8GB, comes with new features, is more than twice as fast as its predecessor, and is the first Raspberry Pi to include silicon designed in-house. While its core is still a Broadcom 64-bit quad-core Arm processor (Cortex-A76 cores running at 2.4GHz), three new chips have been designed specifically for it: an updated and faster GPU, the VideoCore VII, capable of driving two 4K/60Hz HDMI displays simultaneously; the RP1, which handles most of the input/output; and the DA9091, an integrated power-management component. The chips handling Ethernet and wireless networking (Wi-Fi 802.11ac, Bluetooth 5.0 and Bluetooth Low Energy) are the same as in the Pi 4.

At first glance, the Raspberry Pi 5 looks similar to its predecessors. Like the company’s other flagship products, the Pi 5 is manufactured at the Sony UK Technology Centre in Pencoed, South Wales. New accessories include a case with a small built-in fan (10 USD), an active cooler (5 USD), a 27W USB-C power supply, and a small rechargeable lithium-manganese battery (5 USD) that keeps the Raspberry Pi 5’s real-time clock (RTC) running when the main power supply is disconnected. The Pi 5 is available for preorder now from several approved resellers, and the first units should ship by the end of October.

Updated case. © Raspberry Pi Ltd.

⇨ YouTube, “Eben Upton introduces Raspberry Pi 5”

⇨ YouTube, “Introducing Raspberry Pi 5”

⇨ The Verge, Emma Roth, “The Raspberry Pi 5 is finally here.”

⇨ Ars Technica, Kevin Purdy, “Raspberry Pi 5, with upgraded everything, available for preorder today.”

2023-09-28

Windows and Surface team lead joins Amazon

Panos Panay unveils the Surface Pro in Shanghai in 2017. © Microsoft.

Panos Panay, in charge of Surface’s hardware line from the very beginning and of Windows development since 2020, suddenly left Microsoft about ten days ago, just before the presentation event for the new Surface products. His role has been filled by Yusuf Mehdi, director of consumer marketing, a face we know from his presentations of most of the company’s major announcements related to generative AI.

Today we learn that Panay is joining Amazon to take the reins of its hardware division, replacing David Limp, who announced last August that he would be leaving Amazon. “As a strong product builder and inventor who has deep experience in both hardware and integrated services, Panos will be a great addition to our devices and services organization moving forward,” writes Amazon CEO Andy Jassy in a note to employees. Panay will start in late October alongside Limp, who will help onboard him over the next few months. Limp is not retiring, however: in December, he will take over at Blue Origin, the space company owned by Amazon founder and former CEO Jeff Bezos.

⇨ Ars Technica, Andrew Cunningham, “Panos Panay, leader of the Surface and Windows teams, is leaving Microsoft.”

⇨ The Verge, Emma Roth, “Panos Panay is leaving Microsoft for Amazon.”

2023-09-28

AI: Amazon pulls out the big guns

Amazon+Anthropic.

On Monday, Amazon announced that it would invest up to 4 billion USD in Anthropic, the AI start-up best known for its Claude chatbot, founded by Dario and Daniela Amodei, both formerly of OpenAI. This investment clearly signals that Amazon has no intention of being left behind in the AI arms race. Under the agreement, Amazon will take a minority stake in the company and will incorporate Anthropic technology into various products, in particular Amazon Bedrock, its AWS service for building AI applications. Anthropic, for its part, will use Amazon’s Trainium and Inferentia chips to build, train and deploy future AI models, and has committed to making Amazon Web Services (AWS) its primary cloud provider.

Anthropic’s Claude and Claude 2 chatbots, which are based on large language models, are similar to OpenAI’s ChatGPT and Google’s Bard. They can translate text, write code and answer various questions. Anthropic says that its model is safer and more reliable because it is based on a set of principles intended to make it “helpful, honest and harmless”.

⇨ The Verge, Jess Weatherbed, “Amazon will invest up to $4 billion into OpenAI rival Anthropic.”

2023-09-25

Ars Technica goes over macOS 14 Sonoma with a fine-tooth comb

macOS 14 Sonoma. © Apple.

As with every major release, Ars Technica has scrutinized every feature of the new macOS version. This time, however, the review runs to a “mere” 10,000 words, whereas past reviews have run much longer, sometimes over 20,000 words. The shorter length speaks volumes: macOS Sonoma doesn’t really have much to say for itself. Andrew Cunningham writes that though there’s not much new “for any one kind of Mac user”, there is still “a little something for everybody.”

Little somethings include pretty screen savers and wallpapers, widgets that could finally prove useful now that they are implemented more solidly, and Apple’s password manager, enriched with features that should make it more practical for non-experts. Beyond that, there’s not much to sink your teeth into. Cunningham laments that Intel Macs are increasingly sidelined, and that those still in use miss out on features reserved for Macs with Apple’s in-house processors. This version of macOS also comes with the Game Porting Toolkit, which won’t be of much use for the time being, but should eventually make it easier to bring interesting games to the platform.

⇨ Ars Technica, Andrew Cunningham, “macOS 14 Sonoma: The Ars Technica review.”

2023-09-26

AI gives compression algorithms a run for their money

Chinchilla. © iStock.

In an arXiv research paper titled “Language Modeling Is Compression”, researchers used Chinchilla 70B, a large language model (LLM) by DeepMind, to losslessly compress images from the ImageNet database. They compressed the images to 43.4% of their original size, beating the PNG algorithm, which compressed the same data to 58.5%. As for audio, Chinchilla compressed samples from the LibriSpeech audio data set to just 16.4% of their raw size, substantially outdoing FLAC, which reached 30.3%. The principle is that a model that predicts the next piece of data well can drive an arithmetic coder, which spends roughly −log2 p bits on a symbol the model assigned probability p: the better the predictions, the smaller the output. These results suggest that though Chinchilla 70B was trained mainly on text, it is surprisingly effective at compressing other types of data, often outperforming algorithms specifically designed for the task. This opens the door to new, original applications for large language models.

That being said, an LLM requires much more computing power, energy and memory than lossless compression algorithms such as FLAC or PNG, which were designed to be lightweight and very fast. Chinchilla, with its 70 billion parameters, is not about to replace our trusty algorithms in many common applications.
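The study’s central idea is that any model able to predict the next symbol can be turned into a lossless compressor through arithmetic coding, where each symbol costs roughly −log2 p bits, p being the probability the model gave it. The minimal Python sketch below illustrates that principle under loudly stated assumptions: a toy adaptive character-frequency model (the hypothetical `AdaptiveModel` class) stands in for Chinchilla, and exact `Fraction` arithmetic is used for clarity instead of the finite-precision integer coder a real implementation would need. The `encode` and `decode` helpers are illustrative names, not code from the paper.

```python
from fractions import Fraction
from math import ceil


class AdaptiveModel:
    """Hypothetical toy predictor standing in for the LLM.

    It predicts the next symbol from how often each symbol has appeared so far
    (Laplace smoothing: every symbol starts with a count of 1).
    """

    def __init__(self, alphabet):
        self.alphabet = sorted(alphabet)
        self.counts = {s: 1 for s in self.alphabet}

    def probs(self):
        total = sum(self.counts.values())
        return {s: Fraction(c, total) for s, c in self.counts.items()}

    def update(self, symbol):
        self.counts[symbol] += 1


def encode(data, alphabet):
    """Arithmetic-code `data`; return (k, n), meaning the n-bit binary fraction k / 2**n."""
    model = AdaptiveModel(alphabet)
    low, width = Fraction(0), Fraction(1)
    for sym in data:
        p = model.probs()
        # Cumulative probability of the symbols ordered before `sym`.
        before = model.alphabet[: model.alphabet.index(sym)]
        cum = sum((p[s] for s in before), Fraction(0))
        # Narrow the interval to the sub-interval assigned to `sym`:
        # a likely symbol (large p) shrinks it only a little, so it costs few bits.
        low += width * cum
        width *= p[sym]
        model.update(sym)
    # Pick the shortest binary fraction lying inside [low, low + width).
    n = 0
    while True:
        k = ceil(low * 2**n)
        if Fraction(k, 2**n) < low + width:
            return k, n
        n += 1


def decode(k, n, length, alphabet):
    """Invert `encode`, given the code (k, n) and the number of symbols to recover."""
    model = AdaptiveModel(alphabet)  # same model, same updates as the encoder
    x = Fraction(k, 2**n)
    low, width = Fraction(0), Fraction(1)
    out = []
    for _ in range(length):
        p = model.probs()
        cum = Fraction(0)
        for s in model.alphabet:
            # Stop at the symbol whose sub-interval contains x.
            if x < low + width * (cum + p[s]):
                break
            cum += p[s]
        out.append(s)
        low += width * cum
        width *= p[s]
        model.update(s)
    return "".join(out)


if __name__ == "__main__":
    text = "aaaaaaaabaaaaaaa" * 4  # highly predictable input
    k, n_bits = encode(text, set(text))
    print(f"{len(text)} symbols -> {n_bits} bits (vs {8 * len(text)} bits as plain bytes)")
    assert decode(k, n_bits, len(text), set(text)) == text
```

Swapping the toy model for a better predictor (in the study’s case, an LLM) means the actual symbols receive higher probabilities, the interval shrinks less and fewer bits are needed. The catch is that the model must run once per symbol on both the encoding and decoding sides, which is where the heavy computing cost of an LLM-based compressor comes from.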

⇨ Ars Technica, Benj Edwards, “AI language models can exceed PNG and FLAC in lossless compression, says study.”

2023-09-28