Spiria Tech Recap - № 371 - Nvidia H100 NVL, ChatGPT on MS-DOS, Framework 16, GitHub Copilot X, etc.

The RAMbo of GPU cards

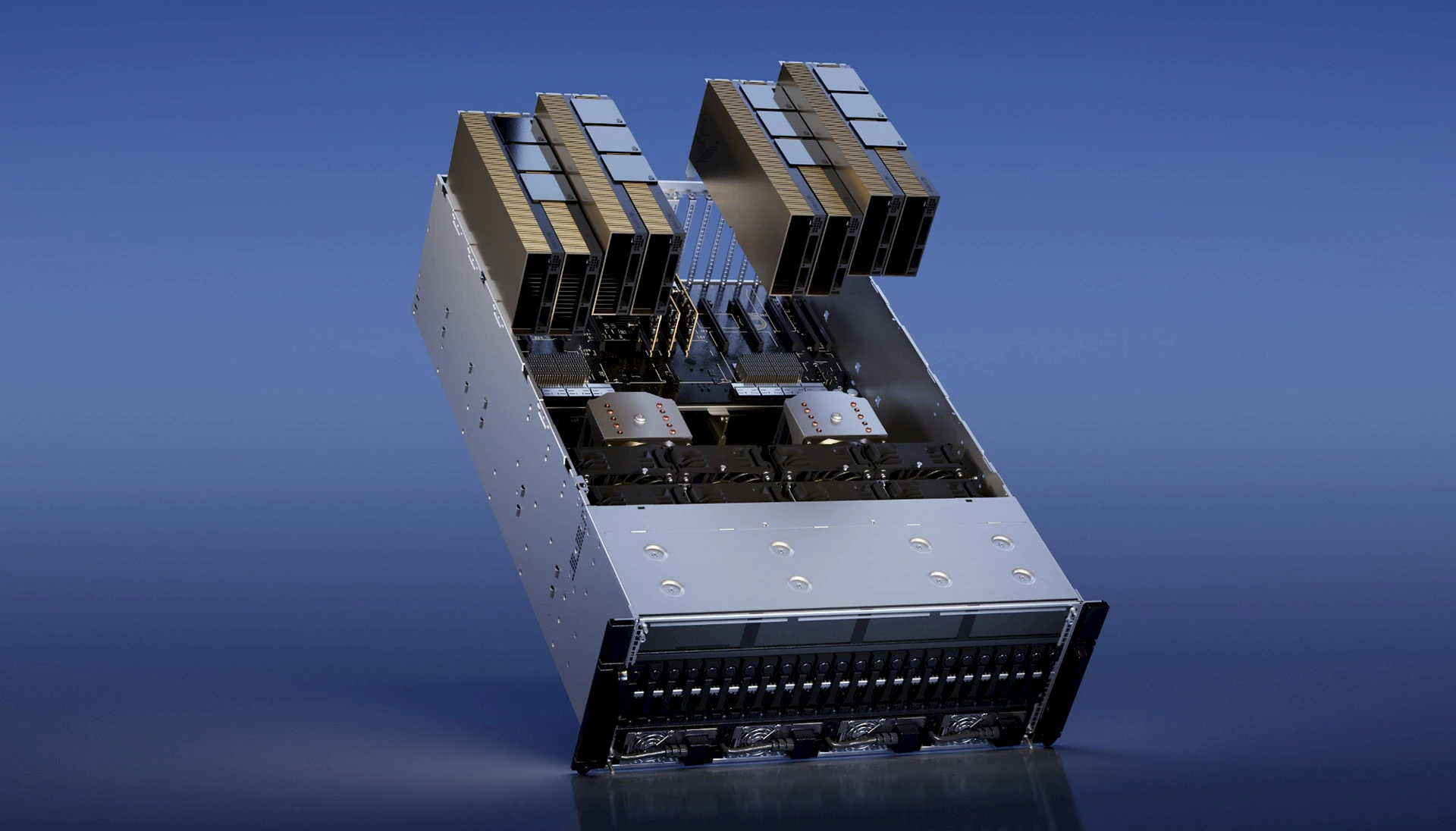

H100 NVL. © Nvidia.

As large language models (LLMs) grow in popularity, GPU manufacturers have started designing products specifically for them, since these models require lots of memory and computing power. Nvidia built the H100 NVL, which consists of two PCIe H100 cards joined by a bridge of NVLink 4 connections. It’s equipped with 188GB of HBM3 RAM, an extraordinary amount of memory, and the card’s overall bandwidth is 7.8TB/second. Nvidia says that the H100 NVL offers twelve times the GPT3-175B (175 billion parameters) inference throughput of a last-generation HGX A100. Since it’s a dual-card design, each H100 NVL occupies two slots. These cards will start shipping in the second half of 2023. The company hasn’t mentioned a price, but we expect a five-figure price tag. The huge growth in the use of LLMs is triggering something like a gold rush in the server GPU market.
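To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It only counts the memory needed to hold the model weights, and it rests on assumptions that are not from Nvidia: 2 bytes per parameter (16-bit weights), 1GB taken as 10^9 bytes, and no allowance for activations or caches. The function names are purely illustrative.

```python
import math

# Figures quoted in the article.
H100_NVL_MEMORY_GB = 188       # total HBM3 across both cards
H100_NVL_BANDWIDTH_TBS = 7.8   # combined memory bandwidth
CARDS_PER_UNIT = 2
GPT3_PARAMS = 175e9            # 175 billion parameters


def per_card(total):
    """Split a whole-unit figure evenly across the two cards."""
    return total / CARDS_PER_UNIT


def weights_size_gb(params, bytes_per_param=2):
    """Memory for the weights alone (assumption: 2 bytes per parameter)."""
    return params * bytes_per_param / 1e9


def units_needed(params, bytes_per_param=2, memory_gb=H100_NVL_MEMORY_GB):
    """How many H100 NVL units are needed just to hold the weights."""
    return math.ceil(weights_size_gb(params, bytes_per_param) / memory_gb)


print(per_card(H100_NVL_MEMORY_GB))   # 94.0 GB per card
print(weights_size_gb(GPT3_PARAMS))   # 350.0 GB of 16-bit weights
print(units_needed(GPT3_PARAMS))      # 2 units under these assumptions
```

Under these assumptions, a 175-billion-parameter model does not fit on a single conventional card, which is why memory capacity is the headline feature here.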

Also in the news, Michael Kagan, Nvidia’s chief technology officer, recently told The Guardian that “cryptocurrencies add nothing useful to society.” He added: “I never believed that [crypto] is something that will do something good for humanity. You know, people do crazy things, but they buy your stuff, you sell them stuff. But you don’t redirect the company to support whatever it is.” Nvidia never really embraced the crypto community. As a matter of fact, the company set up a system to artificially limit the use of its graphics cards for mining the popular Ethereum cryptocurrency, to make sure that its products remained available for target clients such as AI researchers and gamers.

⇨ AnandTech, Ryan Smith, “NVIDIA Announces H100 NVL - Max Memory Server Card for Large Language Models.”

⇨ Tom’s Hardware, Jarred Walton, “Nvidia Is Bringing Back the Dual GPU... for Data Centers.”

2023-03-21

ChatGPT meets MS-DOS

IBM 5155. Peter Böhm - CC BY 3.0.

Yeo Kheng Meng is a retrocomputing hobbyist based in Singapore with a Slack client for Windows 3.1 to his credit. He just released a ChatGPT client for MS-DOS that can run on a 4.77 MHz IBM PC, providing a unique way to converse with OpenAI’s popular language model. Running ChatGPT on early-1980s computers proved quite the challenge, as much for the lack of native networking capability as for these machines’ limited power (Yeo used a 1984 IBM Portable PC 5155, with a 4.77 MHz Intel 8088 processor and 640KB of memory). The developer worked with Open Watcom C/C++, a modern compiler running on Windows 11 that can target 16-bit DOS platforms. To add a network layer, he went with the PC/TCP Packet Driver, a network API invented in 1983, and a library of TCP/IP utilities for DOS developed by Michael B. Brutman. Last but not least, ChatGPT APIs require encrypted HTTPS connections, yet there is no library that can handle them on MS-DOS. Yeo therefore had to create an HTTP-to-HTTPS proxy that runs on a modern computer and translates requests and responses between the MS-DOS client and ChatGPT’s secure API. On the subject of this tinkering, Yeo writes in his blog: “Purists may not like this solution but this is the best I can do with my abilities in a reasonable amount of time.”
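For readers curious about what an HTTP-to-HTTPS proxy involves, here is a minimal sketch in Python using only the standard library. It is not Yeo's actual proxy. The upstream host, the listening port, the list of forwarded headers and all names below are assumptions chosen for illustration. The idea is simply that the vintage machine speaks plain HTTP to a modern computer on the local network, which replays each request over HTTPS and hands back the response.

```python
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Assumptions for illustration only: upstream host and local port.
UPSTREAM_HOST = "api.openai.com"
LISTEN_PORT = 8080

# Request headers passed through from the plain-HTTP client.
FORWARDED_HEADERS = ("Authorization", "Content-Type")


def to_https_url(path, host=UPSTREAM_HOST):
    """Map the path of a plain-HTTP request to the HTTPS upstream URL."""
    if not path.startswith("/"):
        path = "/" + path
    return "https://" + host + path


class RelayHandler(BaseHTTPRequestHandler):
    """Accepts plain-HTTP POSTs and replays them over HTTPS."""

    def do_POST(self):
        # Read the request body sent by the plain-HTTP client.
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        headers = {
            name: self.headers[name]
            for name in FORWARDED_HEADERS
            if self.headers.get(name)
        }
        request = urllib.request.Request(
            to_https_url(self.path), data=body, headers=headers, method="POST"
        )
        try:
            with urllib.request.urlopen(request) as upstream:
                status = upstream.status
                content_type = upstream.headers.get("Content-Type")
                payload = upstream.read()
        except urllib.error.HTTPError as err:
            # Pass upstream error responses back to the client unchanged.
            status = err.code
            content_type = err.headers.get("Content-Type")
            payload = err.read()

        # Relay status, content type and body over plain HTTP.
        self.send_response(status)
        self.send_header("Content-Type", content_type or "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def main():
    """Serve until interrupted; call this from a modern machine on the LAN."""
    HTTPServer(("0.0.0.0", LISTEN_PORT), RelayHandler).serve_forever()
```

Calling `main()` starts the relay. The DOS client would then send its requests to the relay's address over plain HTTP instead of contacting the secure API directly. Note that the link between the old PC and the relay is unencrypted, so a setup like this only makes sense on a trusted local network.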

⇨ YouTube, “DOS ChatGPT client on a 1984 IBM Portable PC 5155”

⇨ Ars Technica, Benj Edwards, “Hobbyist builds ChatGPT client for MS-DOS.”

2023-03-23

Framework launching a 16-inch laptop

Framework 16. © Framework.

After the success of its 13-inch modular laptop (which can be repaired, modified and upgraded by its user, and which has just received a 13th generation Intel processor upgrade option, as well as a brand new AMD Ryzen option), Framework has announced the upcoming release of a 16-inch laptop. This larger-screen model can accommodate more powerful processors, specialized GPUs and a range of different keyboard modules, all with the same commitment to repairability and upgradeability as the original Framework Laptop. A removable expansion bay at the rear of the notebook is an attractive feature: it accommodates GPU modules should you want more graphics power than the integrated unit offers. These GPU modules use eight lanes of PCIe bandwidth and can be plugged and unplugged without modifying anything else on the laptop. It’s a very interesting concept that will need more time to develop. Preorders won’t open before the spring and deliveries won’t begin before the end of 2023.

⇨ Ars Technica, Andrew Cunningham, “Framework’s first gaming laptop features upgradeable GPUs, swappable keyboards.”

2023-03-23

GitHub Copilot X

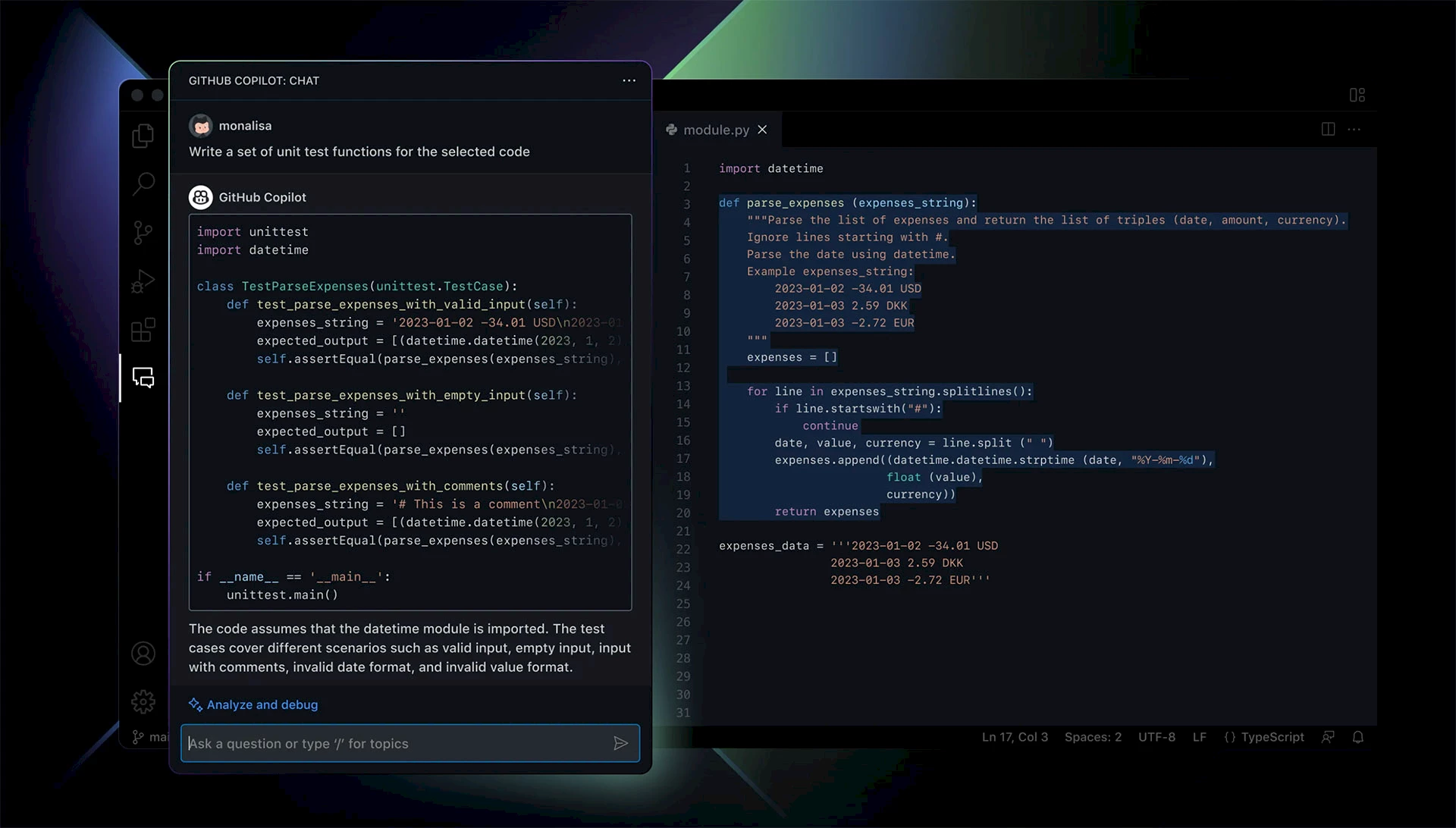

Copilot X. © GitHub.

GitHub, owned by Microsoft, is overhauling its Copilot system to integrate OpenAI’s GPT-4 model. This new version of the programming assistance tool brings voice support and a chatbot to the integrated development environment (IDE). The chatbot can recognize and explain code, recommend changes, and fix bugs. The tool’s new potential goes far beyond simple smart code autocompletion and brings it closer to functioning as a true coding assistant, like Microsoft’s new Copilot for Microsoft 365 Apps. “It’s a similar idea to the Bing chat or the Microsoft Edge sidebar, but bringing that into the developer workflow,” says Thomas Dohmke, GitHub CEO. “I think for developers the difference between GitHub Copilot and Bing is that Copilot is focused on code. You can ask it to fix your code, ask it to explain the code to you, and you can actually ask it to write a unit test.” Voice support means you won’t even need a keyboard to code. With the “Hey, GitHub!” feature, you can use voice commands to have Copilot answer questions or suggest lines of code. Also useful: the assistant can find up-to-date answers in the React, Azure, and MDN documentation. This new Copilot X system will initially only be available in Microsoft’s Visual Studio and Visual Studio Code applications, but GitHub plans to expand it to other IDEs in the future.

According to GitHub, Copilot has already boosted the productivity of a million developers, helping them code up to 55% faster. Thomas Dohmke thinks this will increase further with these new chat features, and that AI assistants like Copilot are going to change how people learn to code in the future.

⇨ YouTube, “GitHub Copilot X”

⇨ The Verge, Tom Warren, “GitHub Copilot gets a new ChatGPT-like assistant to help developers write and fix code.”

2023-03-22

Ever greener Microsoft

© CarbonCapture.

Microsoft, a major supporter of emerging technologies that remove carbon dioxide from the atmosphere, has agreed to purchase carbon removal credits from Los Angeles-based startup CarbonCapture. The company has developed a modular technology that sucks CO2 from the atmosphere and stores it underground, preventing this greenhouse gas from contributing to climate change. Microsoft has set a goal to become “carbon negative” by 2030, meaning it would remove more CO2 pollution from the atmosphere than it generates through the use of fossil fuels. This agreement helps Microsoft move toward its carbon-negative goal, while backing the growth of the direct-air capture industry as a whole. CarbonCapture plans to capture and store 10,000 tons of CO2 per year after rolling out its first modules in Wyoming next year, and expects to be able to remove 5 million tons of carbon dioxide per year by 2030.

⇨ The Verge, Justine Calma, “Microsoft inks another deal to capture and store its carbon emissions underground.”

2023-03-22