The RAMbo of GPU cards

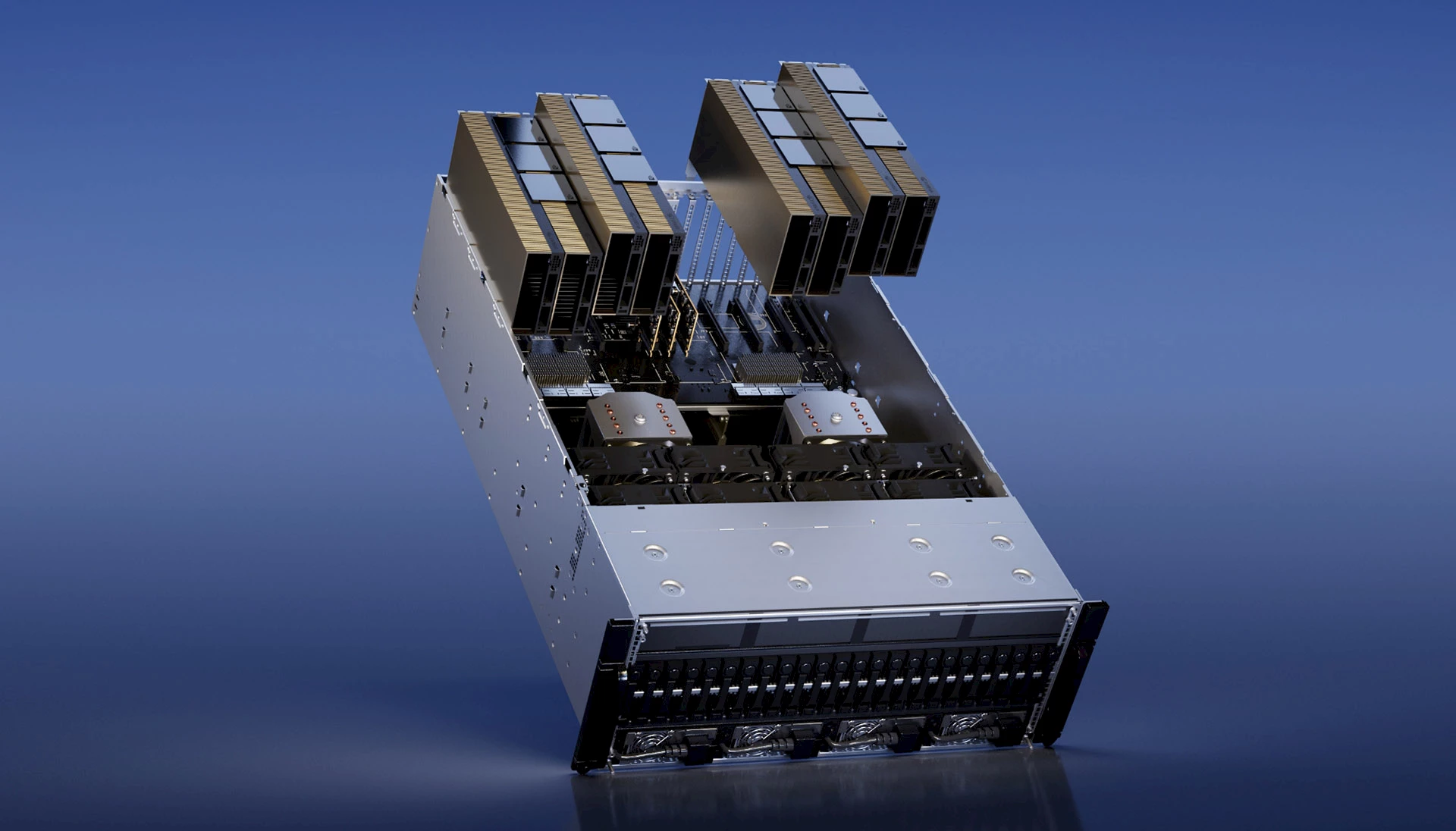

H100 NVL. © Nvidia.

As large language models (LLMs) grow in popularity, GPU manufacturers have started designing products specifically for them, since they demand large amounts of memory and computing power. Nvidia built the H100 NVL, which consists of two PCIe H100 cards joined by NVLink 4 bridge connections. It’s equipped with 188GB of HBM3 RAM, an extraordinary amount of memory, and the combined memory bandwidth of the pair is 7.8TB/s. Nvidia says that the H100 NVL offers twelve times the GPT-3 175B (175 billion parameters) inference throughput of a last-generation HGX A100. Since it’s dual-card, each H100 NVL occupies two slots. The cards will start shipping in the second half of 2023. The company hasn’t mentioned a price, but we expect it to be in the five figures. The huge growth in the use of LLMs is triggering something like a gold rush in the server GPU market.
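To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It only uses the numbers quoted above; the bytes-per-parameter values (2 for 16-bit weights, 1 for 8-bit weights) and the decimal definition of a gigabyte are our own assumptions, and the estimate ignores activations and any other runtime memory, so treat it as an illustration rather than a sizing guide.

```python
# Figures quoted in the text above.
TOTAL_MEMORY_GB = 188        # HBM3 across both cards
TOTAL_BANDWIDTH_TBS = 7.8    # combined memory bandwidth
CARDS = 2                    # the H100 NVL is a pair of PCIe H100 cards
PARAMS = 175e9               # GPT-3 175B

# Assumption (not from the article): storage cost per weight.
BYTES_PER_PARAM = {"16-bit": 2, "8-bit": 1}


def weights_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


def fits(params: float, precision: str) -> bool:
    """True if the weights alone fit in one H100 NVL's total memory."""
    return weights_gb(params, BYTES_PER_PARAM[precision]) <= TOTAL_MEMORY_GB


per_card_memory_gb = TOTAL_MEMORY_GB / CARDS
per_card_bandwidth_tbs = TOTAL_BANDWIDTH_TBS / CARDS

if __name__ == "__main__":
    print(f"Per card: {per_card_memory_gb:.0f} GB, {per_card_bandwidth_tbs:.1f} TB/s")
    for precision, nbytes in BYTES_PER_PARAM.items():
        size = weights_gb(PARAMS, nbytes)
        verdict = "fits" if fits(PARAMS, precision) else "does not fit"
        print(f"{precision} weights: {size:.0f} GB, {verdict} in {TOTAL_MEMORY_GB} GB")
```

Under these assumptions, each card contributes 94GB and 3.9TB/s, and a 175-billion-parameter model only squeezes into a single H100 NVL if its weights are stored at 8 bits or less.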

Also in the news, Michael Kagan, Nvidia’s chief technology officer, recently told The Guardian that “cryptocurrencies add nothing useful to society.” He added: “I never believed that [crypto] is something that will do something good for humanity. You know, people do crazy things, but they buy your stuff, you sell them stuff. But you don’t redirect the company to support whatever it is.” Nvidia never really embraced the crypto community. In fact, the company deliberately limited the performance of its graphics cards when mining the popular Ethereum cryptocurrency, to make sure that its products remained available to its target customers, such as AI researchers and gamers.

⇨ AnandTech, Ryan Smith, “NVIDIA Announces H100 NVL - Max Memory Server Card for Large Language Models.”

⇨ Tom’s Hardware, Jarred Walton, “Nvidia Is Bringing Back the Dual GPU... for Data Centers.”

2023-03-21